Google has released a significant update to its open-source medical artificial intelligence suite, introducing MedGemma 1.5 and a new speech-to-text model called MedASR. These tools are designed to provide developers and researchers with more powerful starting points for creating healthcare applications, featuring enhanced capabilities for interpreting complex medical images and transcribing clinical dictation.

The new models, available free for both research and commercial use, aim to accelerate the adoption of AI in medicine. The update follows the successful launch of the initial MedGemma collection, which has seen millions of downloads and spurred the creation of hundreds of community-built variations.

Key Takeaways

- Google has launched MedGemma 1.5, an updated open-source AI model with improved capabilities for analyzing high-dimensional medical images like CT scans and MRIs.

- A new specialized speech recognition model, MedASR, has been introduced to accurately transcribe medical dictation.

- Benchmarks show MedGemma 1.5 improving on its predecessor across image and text tasks, with reported accuracy gains ranging from 3 to 22 percentage points.

- A $100,000 MedGemma Impact Challenge has been announced on Kaggle to encourage innovative healthcare applications using the new tools.

A New Generation of Medical AI Tools

Healthcare is increasingly turning to artificial intelligence, with the sector reportedly adopting it at twice the rate of the broader economy. To support this shift, Google is expanding its Health AI Developer Foundations (HAI-DEF) program, which provides foundational models for others to build upon.

The latest release includes MedGemma 1.5 4B, a compute-efficient model that builds on the original's success. According to developers Daniel Golden and Fereshteh Mahvar, this update was guided by community feedback and is intended to be a versatile starting point that is small enough to run offline on local hardware.

Alongside MedGemma 1.5, the company introduced MedASR, an automated speech recognition (ASR) system fine-tuned for the unique vocabulary and context of medical dictation. The tool converts spoken clinical notes into text, which can then be passed to models like MedGemma for further analysis.

What is a Foundational Model?

Foundational models like MedGemma are large, pre-trained AI systems that serve as a base for developing more specialized applications. Instead of starting from scratch, developers can fine-tune these models on their own specific data, saving significant time and resources while leveraging the model's powerful core capabilities.

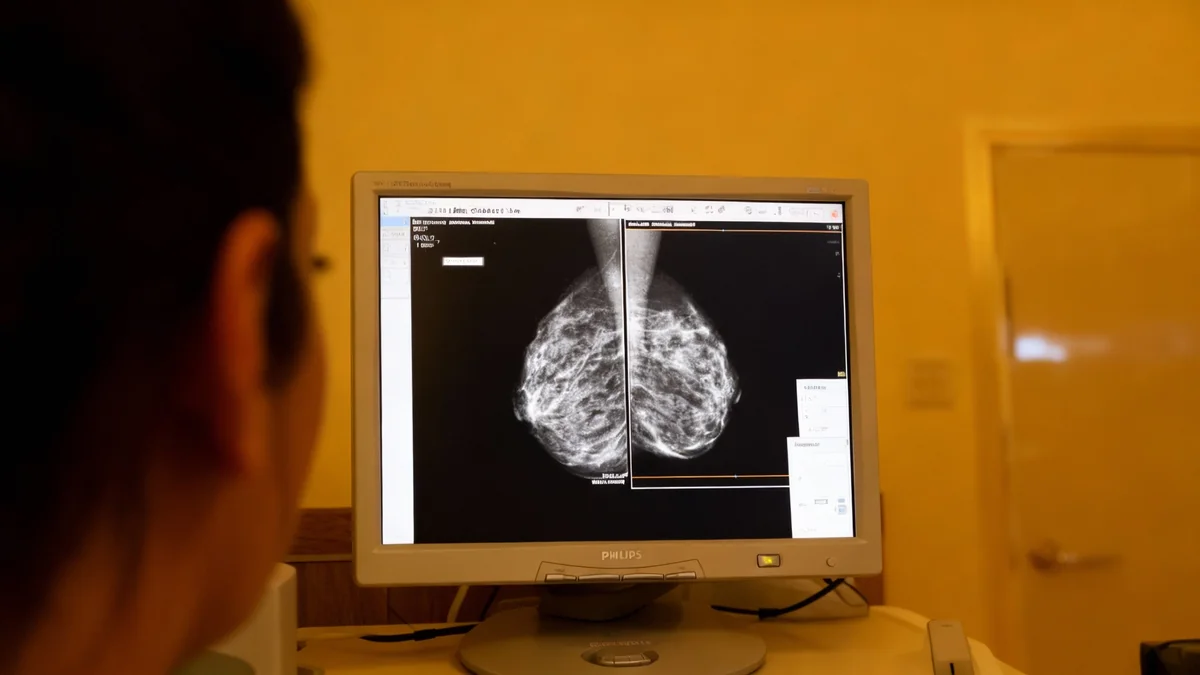

Enhanced Imaging Sees Deeper into Medical Data

One of the most significant advancements in MedGemma 1.5 is its ability to interpret high-dimensional medical imaging. While the first version supported 2D images such as X-rays and dermatology photos, the new model can process three-dimensional volume representations from CT scans and MRIs, as well as whole-slide histopathology images.

This allows developers to create applications where the AI can analyze multiple image slices or patches simultaneously, providing a more comprehensive view of a patient's condition. This capability marks an evolution from previous tools that only generated image embeddings.
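For developers wondering what "multiple image slices" might look like in practice, here is a minimal sketch of one common preprocessing step: choosing an evenly spaced subset of slices from a 3D volume so that it fits within a fixed input budget. The article does not describe how MedGemma 1.5 ingests volumes, so the function name `sample_slice_indices` and the `max_slices` budget are hypothetical and purely illustrative.

```python
def sample_slice_indices(num_slices: int, max_slices: int) -> list[int]:
    """Pick up to max_slices evenly spaced slice indices from a volume.

    Hypothetical helper for illustration only; it is not part of any
    MedGemma API and the real input format may differ.
    """
    if num_slices <= 0 or max_slices <= 0:
        return []
    if num_slices <= max_slices:
        # The whole volume already fits in the budget.
        return list(range(num_slices))
    if max_slices == 1:
        # With room for a single slice, take the middle of the volume.
        return [num_slices // 2]
    # Spread the picks from the first slice to the last slice.
    step = (num_slices - 1) / (max_slices - 1)
    return [round(i * step) for i in range(max_slices)]


# Example: a 101-slice CT volume reduced to 5 representative slices.
print(sample_slice_indices(101, 5))
```

Uniform sampling is only one option. An application could instead concentrate slices around a region of interest, which is a design decision left to the developer.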

Performance Gains in MedGemma 1.5

- CT Scan Analysis: Baseline accuracy improved by 3 percentage points (from 58% to 61%) over MedGemma 1.

- MRI Analysis: Accuracy on classifying disease-related findings rose by 14 percentage points (from 51% to 65%).

- Histopathology: Prediction fidelity score jumped from 0.02 to 0.49, nearly matching a task-specific model.

- Medical Reasoning (Text): Accuracy on the MedQA benchmark increased by 5 percentage points (from 64% to 69%).

- EHR Question Answering: Accuracy on the EHRQA benchmark rose by 22 percentage points (from 68% to 90%).

These improvements extend beyond 3D imaging. The model also shows better performance in localizing anatomy, assessing longitudinal disease progression in chest X-rays, and extracting information from medical lab reports. To facilitate development, applications built on Google Cloud now include full support for DICOM, the standard format for storing and exchanging medical images.
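The accuracy figures listed above are differences between two scores, which is not the same thing as relative improvement. The short sketch below uses only the before and after values reported in this article to show both readings side by side. The helper name `gains` is illustrative.

```python
# Before/after accuracy (in percent) as reported in the article.
benchmarks = {
    "CT": (58, 61),
    "MRI": (51, 65),
    "MedQA": (64, 69),
    "EHRQA": (68, 90),
}


def gains(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative gain in percent)."""
    points = after - before
    relative = 100 * points / before
    return points, relative


for name, (before, after) in benchmarks.items():
    points, relative = gains(before, after)
    print(f"{name}: +{points} points, {relative:.1f}% relative to the old score")
```

For example, the MRI result is a 14-point gain, but measured against the old score of 51% it is a relative improvement of roughly 27%.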

MedASR Aims to Perfect Medical Transcription

While text remains a primary interface for large language models, verbal communication is central to healthcare. To bridge this gap, the MedASR model was developed specifically to handle the specialized language of medicine.

In comparative tests, MedASR performed substantially better than general-purpose speech recognition models. According to Google's internal benchmarks, it made 58% fewer errors than Whisper large-v3 on chest X-ray dictations and 82% fewer errors on a diverse internal medical dictation benchmark.

This level of accuracy is critical for clinical settings, where a single mistranscribed word could have significant consequences. The model can be used for transcribing physician notes or as a natural way for clinicians to dictate prompts directly to an AI system like MedGemma for analysis.
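To make a claim like "58% fewer errors" concrete, the sketch below computes word error rate (WER), the usual metric for speech recognition, and the relative reduction between two systems. This assumes the reported figures refer to WER, which the article does not state explicitly. The sample sentences and the WER values in the usage lines are made up for illustration and are not benchmark data.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1] / len(ref)


def relative_error_reduction(baseline_wer: float, new_wer: float) -> float:
    """Percent fewer errors made by the new system versus the baseline."""
    return 100 * (baseline_wer - new_wer) / baseline_wer


# Illustrative only: one mistranscribed word out of four.
print(word_error_rate("small right pleural effusion",
                      "small right plural effusion"))

# Illustrative only: hypothetical WERs of 10% and 4.2%.
print(relative_error_reduction(0.10, 0.042))
```

Note that a single substituted word, such as "plural" for "pleural", counts as one error regardless of how clinically important the word is, which is one reason domain-specific evaluation matters.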

"The adoption of artificial intelligence in healthcare is accelerating dramatically... In support of this transformation, last year Google published the MedGemma collection of open medical generative AI models."

Driving Innovation Through Community Engagement

To foster creative uses of these new tools, Google has launched the MedGemma Impact Challenge, a hackathon hosted on the Kaggle platform with a prize pool of $100,000. The challenge invites developers from all backgrounds to build applications that showcase the potential of AI to transform healthcare and life sciences.

Early adoption of MedGemma is already showing promise globally. In Malaysia, Qmed Asia has adapted the model to create askCPG, a conversational interface for the country's 150+ clinical practice guidelines. The Ministry of Health Malaysia noted that the tool makes navigating these dense documents more practical for daily clinical support.

Separately, Taiwan’s National Health Insurance Administration used MedGemma to analyze over 30,000 pathology reports for lung cancer surgery assessments. By extracting key data from unstructured text, they performed statistical analyses to inform policy decisions aimed at improving patient outcomes.

All MedGemma and MedASR models are available for download from Hugging Face and can be adapted for scalable applications on Google Cloud's Vertex AI platform. The company emphasizes that these are foundational tools and are not intended for direct clinical use without proper validation and modification by developers for their specific use cases. The outputs require independent verification and should not be used to directly inform patient care decisions.