Scientists have discovered that some advanced artificial intelligence systems are susceptible to the same optical illusions that trick the human eye. The finding gives researchers a new tool for exploring the inner workings of human visual perception and consciousness.

By observing how these digital minds interpret visual tricks, from rotating snakes to ambiguous cubes, neuroscientists are gaining fresh insights into why our brains take mental shortcuts and how we construct our sense of reality.

Key Takeaways

- Researchers have found that certain deep neural networks (DNNs), a type of AI, can be fooled by optical illusions previously thought to affect only humans.

- This allows scientists to study the mechanisms of visual perception in a controlled, digital environment, bypassing the ethical limitations of human brain experiments.

- The AI's reaction to illusions like the 'rotating snakes' pattern supports the 'predictive coding' theory, which suggests our brain constantly predicts what it expects to see.

- Differences in perception, such as the AI's lack of an 'attention mechanism,' highlight the remaining gaps between artificial and biological vision.

A New Window into the Mind

For centuries, optical illusions have been more than just amusing puzzles; they are scientific tools that reveal the clever, and sometimes flawed, ways our brains process information. Our visual system doesn't capture the world like a camera. Instead, it takes shortcuts, making assumptions to quickly make sense of a flood of sensory data.

Now, artificial intelligence is entering this field of study. Researchers are presenting these classic illusions to deep neural networks (DNNs), the same technology that powers everything from medical image analysis to chatbots. The results are compelling: some of these systems are falling for the same tricks we do.

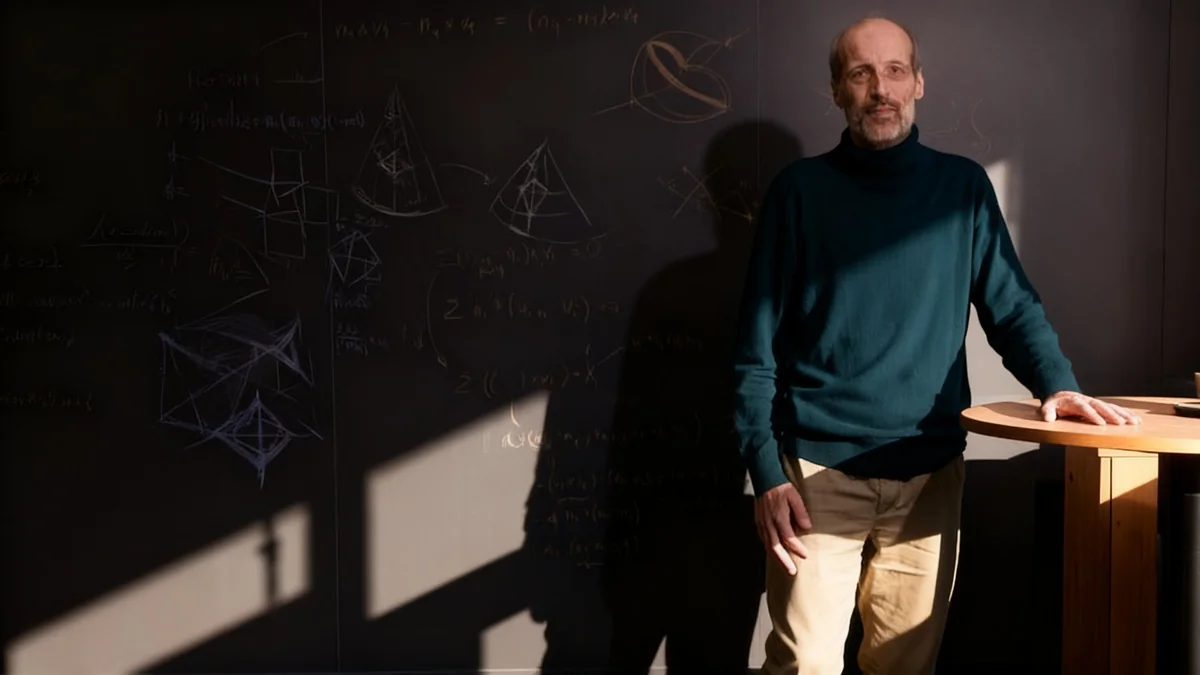

"Using DNNs in illusion research allows us to simulate and analyse how the brain processes information and generates illusions," explains Eiji Watanabe, an associate professor of neurophysiology at the National Institute for Basic Biology in Japan. This approach offers a significant advantage. "Conducting experimental manipulations on the human brain raises serious ethical concerns but no such restrictions apply to artificial models," he added.

The Case of the Rotating Snakes

One of the most telling experiments involved the famous 'rotating snakes' illusion, a static image with patterns that appear to be in constant motion. Watanabe and his team used an AI model called PredNet, whose design is based on predictive coding, a prominent theory of brain function.

What is Predictive Coding?

Predictive coding theory suggests that our brain doesn't just passively receive information from our eyes. Instead, it actively predicts what it expects to see based on past experience. It then processes only the differences between its prediction and the actual visual input. This makes perception efficient, because input that matches the prediction needs little further processing.
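
The predict-compare-update loop described above can be illustrated with a toy example. This is a minimal sketch, not PredNet and not the researchers' code: it tracks a single number instead of an image, and the function name `predictive_coding_errors` and the `learning_rate` parameter are assumptions made up for illustration.

```python
def predictive_coding_errors(inputs, learning_rate=0.5):
    """Return the prediction error at each step for a scalar signal.

    Hypothetical toy model: one number stands in for the whole visual input.
    """
    prediction = 0.0
    errors = []
    for observed in inputs:
        # Only the mismatch between expectation and input is passed on.
        error = observed - prediction
        errors.append(error)
        # The internal model is nudged toward what was actually observed.
        prediction += learning_rate * error
    return errors


# A steady signal followed by one unexpected value.
signal = [1.0] * 8 + [3.0]
errors = predictive_coding_errors(signal)

for step, err in enumerate(errors):
    print(f"step {step}: error = {err:.4f}")
```

While the input stays steady, the error shrinks toward zero, so there is little left to process. The unexpected final value produces a large error, which is the part of the input the system has to deal with.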

The team trained PredNet on millions of frames from videos of natural landscapes, teaching it the normal rules of the visual world. Crucially, the AI was never shown any optical illusions during its training.

When presented with the rotating snakes image, PredNet perceived motion, just as a human would. According to Watanabe, this supports the idea that both our brains and the AI have learned that certain visual cues are strongly associated with movement. The illusion exploits this learned prediction, creating a false sense of motion.

A Key Difference Emerges

While both humans and the AI saw the illusion, a subtle difference provided a major insight. When a person stares directly at one of the 'snakes,' its perceived motion stops, while the others in their peripheral vision continue to spin. PredNet, however, saw all the circles moving simultaneously. Watanabe attributes this to the AI lacking an 'attention mechanism,' the ability to focus on one specific part of an image while processing the rest differently.

Ambiguous Figures and Quantum Models

The research extends beyond motion illusions to ambiguous figures, where an image can be interpreted in two distinct ways. The Necker cube, which can appear to be oriented in two different directions, and the Rubin vase, which can look like a vase or two faces in profile, are classic examples.

Ivan Maksymov, a research fellow at Charles Sturt University in Australia, has taken a novel approach by combining AI with principles from quantum mechanics to simulate how we perceive these figures. Human perception of the Necker cube isn't static; our brain flips between the two interpretations.

"Classical theories of physics would predict that the cube should be perceived in one way or another. But in quantum mechanics, the cube could be in two states at once until our brain chose to perceive one," Maksymov noted, drawing a parallel to the famous Schrödinger's cat thought experiment.

His AI model, which uses a quantum phenomenon to process information, successfully mimicked this behavior. When shown the illusions, the system regularly switched between the two interpretations at intervals remarkably similar to those reported in human tests. "It's quite close to what people see," Maksymov stated.
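
To make the idea of "switching at intervals" concrete, here is a minimal sketch of a system that flips between two interpretations and records how long each one lasts. It is a classical two-state random process swapped in purely for illustration, not Maksymov's quantum model; the function name `simulate_switching`, the `switch_prob` value, and the labels "A" and "B" are all assumptions.

```python
import random


def simulate_switching(steps, switch_prob, seed=0):
    """Simulate flips between two interpretations, "A" and "B".

    Returns a list of (interpretation, dwell_time) pairs, where dwell_time
    is the number of steps that interpretation was held before a flip.
    Hypothetical toy model, not the quantum model from the article.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    state = "A"
    dwell = 0
    dwell_times = []
    for _ in range(steps):
        dwell += 1
        if rng.random() < switch_prob:
            dwell_times.append((state, dwell))
            state = "B" if state == "A" else "A"
            dwell = 0
    return dwell_times


dwell_times = simulate_switching(steps=10_000, switch_prob=0.1)
mean_dwell = sum(d for _, d in dwell_times) / len(dwell_times)
print(f"{len(dwell_times)} switches, mean dwell time {mean_dwell:.1f} steps")
```

The list of dwell times is the kind of quantity that can be compared against the intervals people report when viewing a Necker cube or Rubin vase.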

From the Lab to Outer Space

This line of research has practical implications beyond understanding the brain. Maksymov highlights its potential for simulating how human vision might change in different gravitational environments, such as during space travel.

Previous studies on astronauts aboard the International Space Station (ISS) have shown that their perception of illusions like the Necker cube changes after extended time in orbit. On Earth, gravity provides a subtle cue for depth perception, often causing us to favor one interpretation of the cube over the other. In the weightlessness of space, this bias disappears, and astronauts begin to see both perspectives with equal frequency.

"While it's a narrow field of research, it's quite important because humans want to go to space," said Maksymov. Understanding these perceptual shifts is vital for ensuring astronaut performance and safety on long-duration missions.

While no single AI can yet experience the full spectrum of illusions that humans do, these digital models are proving to be invaluable proxies. They are not only confirming long-held theories about our own minds but are also revealing the subtle complexities of human consciousness that we are only just beginning to understand.