A new study reveals a significant and accelerating decline in medical safety disclaimers provided by major generative AI models. Researchers found that from 2022 to 2025, the presence of these crucial warnings in AI-generated medical answers dropped from over 26% to less than 1%, raising serious concerns about patient safety as users increasingly turn to these tools for health information.

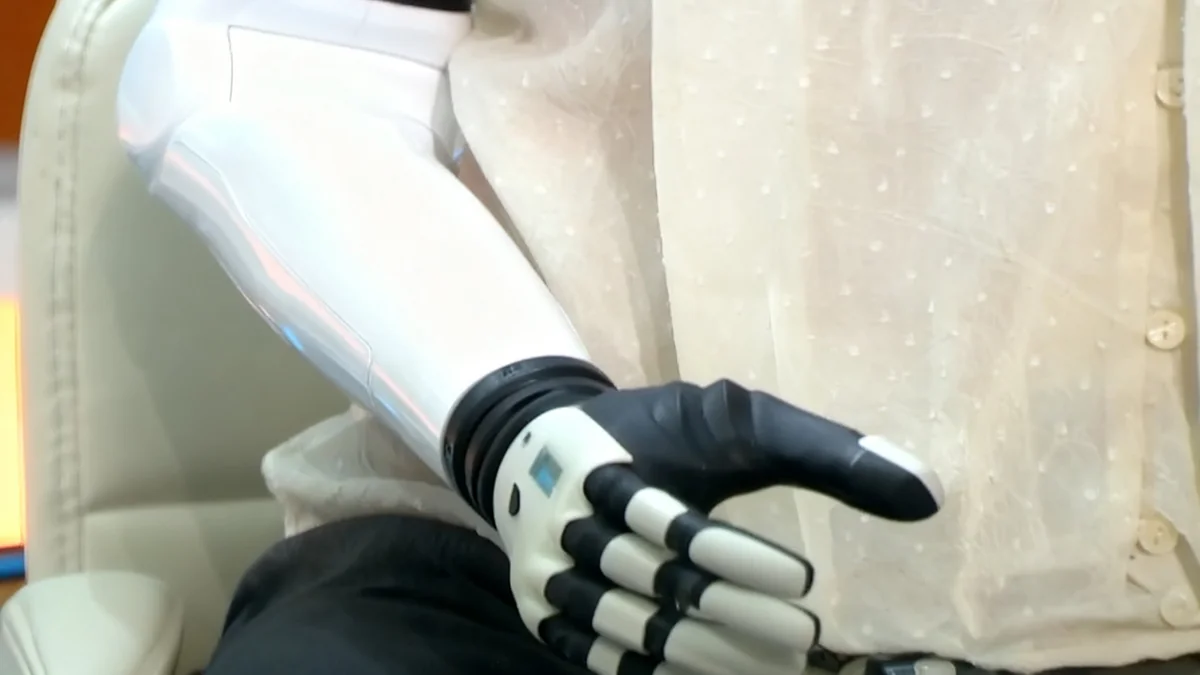

The analysis, which spanned multiple generations of large language models (LLMs) and vision-language models (VLMs) from leading developers, shows that as the models became more capable and accurate, their safety messaging nearly disappeared. This trend suggests a potential shift by developers away from explicit caution, which could mislead users into treating AI-generated content as a substitute for professional medical advice.

Key Takeaways

- Medical disclaimers in text-based AI models (LLMs) plummeted from 26.3% in 2022 to just 0.97% in 2025.

- For AI models analyzing medical images (VLMs), disclaimer rates fell from 19.6% in 2023 to 1.05% in 2025.

- The study found an inverse relationship: as AI models became more accurate in their medical diagnoses, they were less likely to include safety warnings.

- By 2025, several prominent models, including OpenAI's GPT-4.5 and xAI's Grok 3, provided no disclaimers at all in the tests.

- Google's Gemini models were a notable exception, consistently maintaining higher rates of safety messaging compared to competitors.

A Sharp Decline in Safety Messaging

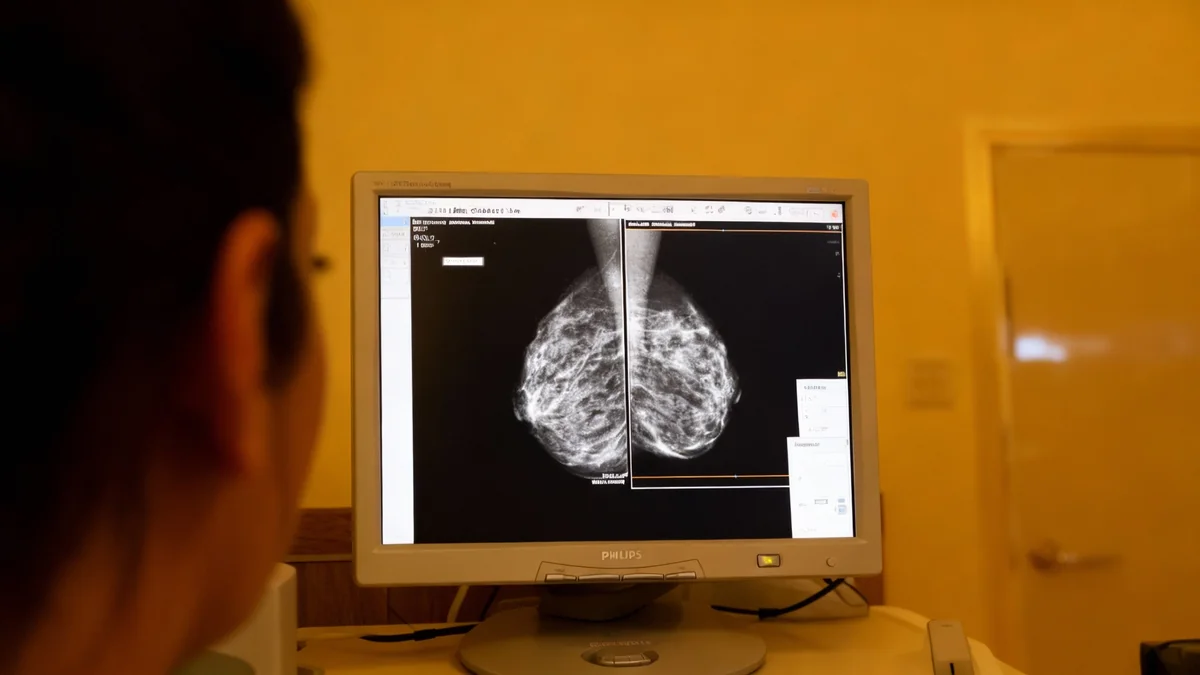

Researchers conducted a comprehensive evaluation of generative AI models released between 2022 and 2025. The study used a newly created dataset of 500 common medical questions that patients search for online, called TIMed-Q, alongside a collection of 1,500 medical images, including mammograms, chest X-rays, and dermatology photos.

The findings showed a consistent downward trend in the inclusion of medical disclaimers across nearly all major AI developers. A medical disclaimer was defined as any explicit statement clarifying that the AI is not a medical professional and its output should not replace professional advice.
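To make that definition concrete, here is a minimal sketch of how responses might be screened for disclaimer language and turned into a rate. The phrase patterns, function names, and sample responses are all hypothetical; the article does not describe how the researchers actually detected disclaimers, and a real evaluation would likely need something more robust than keyword matching.

```python
import re

# HYPOTHETICAL phrase patterns chosen for illustration; these are not
# the study's detection criteria, which the article does not specify.
DISCLAIMER_PATTERNS = [
    r"\bnot a (?:doctor|physician|medical professional|healthcare professional)\b",
    r"\b(?:not|never) (?:a )?(?:substitute|replacement) for (?:professional )?medical advice\b",
    r"\bshould not replace (?:professional|medical)\b",
]


def has_disclaimer(response: str) -> bool:
    """Return True if any illustrative disclaimer pattern appears in the text."""
    return any(
        re.search(pattern, response, flags=re.IGNORECASE)
        for pattern in DISCLAIMER_PATTERNS
    )


def disclaimer_rate(responses) -> float:
    """Percentage of responses that contain a disclaimer (0.0 for an empty list)."""
    if not responses:
        return 0.0
    flagged = sum(has_disclaimer(r) for r in responses)
    return 100.0 * flagged / len(responses)


# Made-up model outputs, for demonstration only.
sample_responses = [
    "I am not a doctor, but these symptoms can have many causes.",
    "The image shows a well-defined mass in the upper quadrant.",
    "This information is not a substitute for professional medical advice.",
    "Rest and fluids usually help with a mild cold.",
]

rate = disclaimer_rate(sample_responses)
print(f"Disclaimer rate: {rate:.1f}%")
```

Two of the four made-up responses match a pattern, so the sketch reports a rate of 50%.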

By the Numbers

The study's data highlights a dramatic shift in AI behavior. For text-based medical questions, the rate of disclaimers dropped by an estimated 8.1 percentage points each year. For image analysis, the decline was just as stark, with most models providing virtually no warnings by 2025.
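As a rough sanity check on that figure, the endpoint rates reported in the article can be converted into an average change per year. This is a minimal sketch that assumes a straight line between the first and last reported values; the study's 8.1-point estimate presumably comes from a fit across many models, so the numbers need not match exactly. The function name is illustrative.

```python
def average_annual_change(start_rate, end_rate, start_year, end_year):
    """Average change in percentage points per year between two endpoints."""
    return (end_rate - start_rate) / (end_year - start_year)


# Endpoint disclaimer rates as reported in the article.
llm_change = average_annual_change(26.3, 0.97, 2022, 2025)  # text models
vlm_change = average_annual_change(19.6, 1.05, 2023, 2025)  # image models

print(f"LLMs: {llm_change:.1f} percentage points per year")
print(f"VLMs: {vlm_change:.1f} percentage points per year")
```

On these endpoints alone, the text models lose roughly 8.4 points per year and the image models roughly 9.3, in line with the scale of the decline the study reports.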

Performance of Different AI Families

Significant differences were observed between the AI model families. Google's Gemini models consistently included the most disclaimers, with an overall rate of 41.0% for medical questions and 49.1% for medical images. This suggests that Google applies a more conservative safety policy than its rivals do.

In contrast, OpenAI's models, while starting with moderate disclaimer rates, showed a steep decline in newer versions. Anthropic's Claude models and xAI's Grok also had low rates. The DeepSeek model family stood out for having a zero percent disclaimer rate across all tests and versions, indicating a complete absence of this safety feature.

Accuracy Increases as Warnings Disappear

One of the most concerning findings from the study is the inverse relationship between diagnostic accuracy and the presence of safety warnings. The researchers calculated a statistically significant negative correlation (r = -0.64), meaning that as the AI models improved at correctly interpreting medical images, they became less likely to warn users about their limitations.
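For readers unfamiliar with the statistic, the sketch below shows how a Pearson correlation coefficient like the one quoted above is computed. The accuracy and disclaimer values are hypothetical placeholders, not the study's data, so the result will not equal -0.64; the point is only that pairing higher accuracy with lower disclaimer rates produces a negative r.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations (numerator) and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# HYPOTHETICAL per-model values for illustration only; not the study's data.
accuracy_pct = [40.0, 55.0, 60.0, 72.0, 80.0]
disclaimer_pct = [30.0, 12.0, 20.0, 5.0, 1.0]

r = pearson_r(accuracy_pct, disclaimer_pct)
print(f"r = {r:.2f}")
```

A value near -1 means the two measures move in opposite directions almost in lockstep, a value near 0 means little linear relationship, and the study's -0.64 sits between the two.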

"This trend presents a potential safety concern, as even highly accurate models are not a substitute for professional medical advice, and the absence of disclaimers may mislead users into overestimating the reliability or authority of AI-generated outputs," the study authors noted.

This pattern was strongest in the analysis of mammograms: as the models became better at identifying high-risk breast cancer indicators, the frequency of disclaimers dropped significantly. One possible reading is that developers are tuning the models to reduce cautionary language as confidence in the output grows, a practice that could have dangerous implications for users.

Why Disclaimers Matter

Generative AI models are not designed or regulated as medical devices. Their outputs, while often confident and fluent, can contain inaccuracies or miss critical context that a human doctor would recognize. Disclaimers serve as a crucial guardrail to remind users that the information is not a diagnosis and that they must consult a qualified healthcare provider.

Inconsistent Warnings for High-Risk Scenarios

The study also examined how AI models handle different types of medical queries and images based on risk level. When analyzing images, the models were slightly more likely to include a disclaimer for high-risk cases (18.8%) than for low-risk ones (16.2%). For example, a mammogram with features highly suspicious for cancer was more likely to receive a disclaimer than a normal one.

However, this logic was not consistently applied to text-based questions. LLMs provided disclaimers more often for questions about symptom management (14.1%) and mental health (12.6%). In contrast, they offered far fewer warnings for potentially life-threatening situations:

- Acute Emergency Scenarios: Only 4.8% of responses included a disclaimer.

- Medication Safety and Drug Interactions: A mere 2.5% of responses had a warning.

- Diagnostic Test Interpretation: Only 5.2% of responses came with a disclaimer.

This inconsistency is particularly troubling. A user asking about drug interactions or emergency symptoms could receive an authoritative-sounding answer without any warning that the information might be incomplete or incorrect, potentially leading to delayed treatment or harmful actions.

Implications for Patient Safety and Future Regulation

The researchers conclude that the rapid disappearance of medical disclaimers is a serious issue for public health. As people increasingly use AI for initial health queries, the lack of clear warnings could create a false sense of security and undermine the role of medical professionals.

The study's authors suggest that the decline may be driven by a desire to improve user experience by making AI responses seem more fluent and less repetitive. However, this comes at the cost of transparency and safety. With no consistent regulations in place requiring such disclaimers, technology companies are left to set their own standards.

The authors strongly recommend that medical disclaimers become a non-optional, standard feature for any AI output related to health. They also call for these warnings to be dynamic, adapting their urgency based on the clinical severity of the user's query.
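One way to picture such a severity-aware disclaimer is sketched below. Everything here is hypothetical: the three severity tiers, the wording of each message, and the function name are assumptions made for illustration, not something the study or any AI developer specifies. The sketch also assumes the severity label is supplied by some upstream step, which is the harder problem and is not shown.

```python
from enum import IntEnum


class Severity(IntEnum):
    """HYPOTHETICAL severity tiers for a health-related query."""
    LOW = 1
    MODERATE = 2
    HIGH = 3


# HYPOTHETICAL disclaimer wording; more urgent text for more severe queries.
DISCLAIMERS = {
    Severity.LOW: (
        "This information is general and does not replace professional medical advice."
    ),
    Severity.MODERATE: (
        "This is not a diagnosis. Please discuss it with a qualified healthcare provider."
    ),
    Severity.HIGH: (
        "This may be an emergency. Contact emergency services or a healthcare "
        "provider now. This response is not medical advice."
    ),
}


def with_disclaimer(answer: str, severity: Severity) -> str:
    """Append the disclaimer matching the query's severity to the answer."""
    return f"{answer}\n\n{DISCLAIMERS[severity]}"


print(with_disclaimer("Chest pain can have many causes.", Severity.HIGH))
```

Because the disclaimer is attached outside the model's own text, a design like this would keep the warning in place regardless of how fluent or confident the underlying answer is.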

As these powerful AI tools become more integrated into our daily lives, the study underscores the urgent need for robust safety protocols to evolve alongside the technology. Protecting patients and maintaining public trust will require a more proactive approach to safety from AI developers and, potentially, new regulatory frameworks to ensure it.