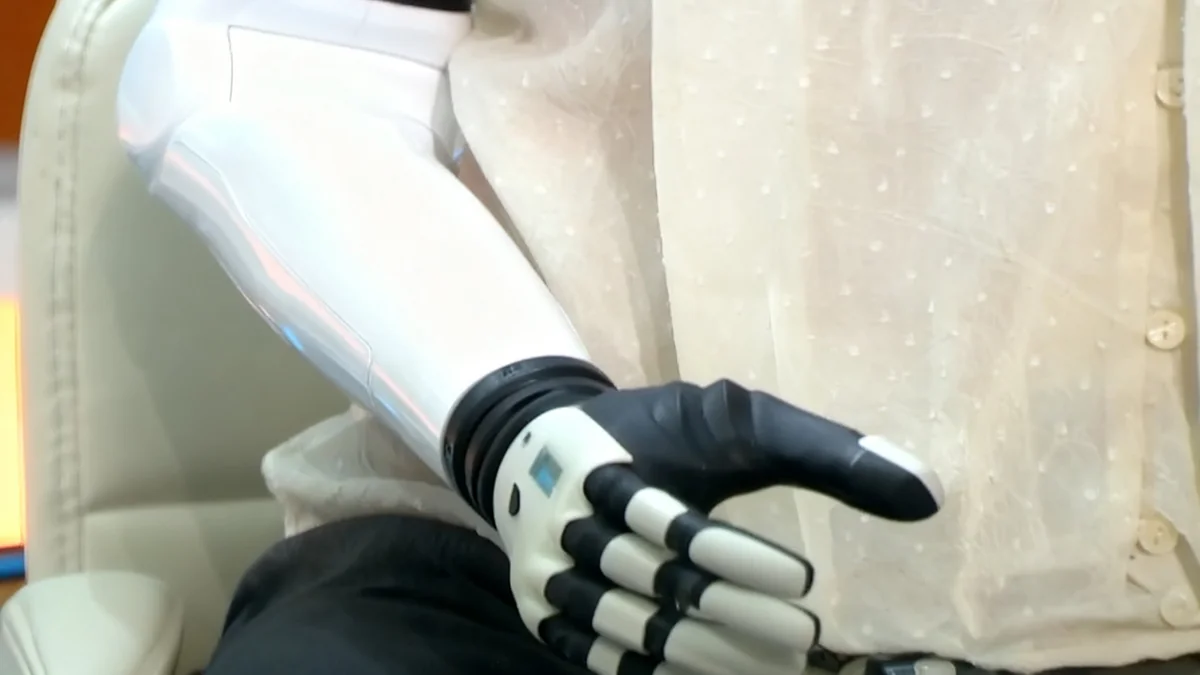

Researchers are exploring the use of artificial intelligence to create digital 'clones' that could help make life-or-death medical decisions for patients who cannot communicate. A project at the University of Washington is in its early stages, but physicians and bioethicists are raising significant ethical questions about the technology's accuracy, potential for bias, and its role alongside human family members.

Key Takeaways

- Researchers at UW Medicine are developing AI models, known as 'AI surrogates,' to predict the end-of-life care preferences of incapacitated patients.

- The project is currently in a conceptual phase, using historical patient data, with no active patient involvement.

- Medical experts express concern that AI cannot capture the complex, context-dependent nature of human values and that patient preferences often change over time.

- A major worry is the potential misuse of this technology for patients without family, where the AI's accuracy and fairness cannot be verified.

- There is a consensus among experts that AI should only serve as a supplementary tool and must never replace human decision-makers like family members.

A New Frontier in Medical AI

The question of how to honor the wishes of a patient who can no longer speak for themselves is one of the most difficult challenges in medicine. For years, researchers have considered whether artificial intelligence could provide a solution. Now, this theoretical concept is moving closer to reality.

Muhammad Aurangzeb Ahmad, a resident fellow at the University of Washington’s UW Medicine, is leading a project at Harborview Medical Center in Seattle to develop AI surrogates. The goal is to create predictive models that can assist doctors and families in making choices that align with a patient's values.

"This is very brand new, so very few people are working on it," Ahmad stated, highlighting the pioneering nature of the research. He emphasized that the project is still in its "conceptual phase" and has not yet involved any patients.

The Current State of Research

Ahmad's initial work focuses on analyzing retrospective data already collected by the hospital. This includes information such as injury severity, medical history, previous treatment choices, and demographic details. The aim is to train a machine learning model and then measure how well it predicts past outcomes.

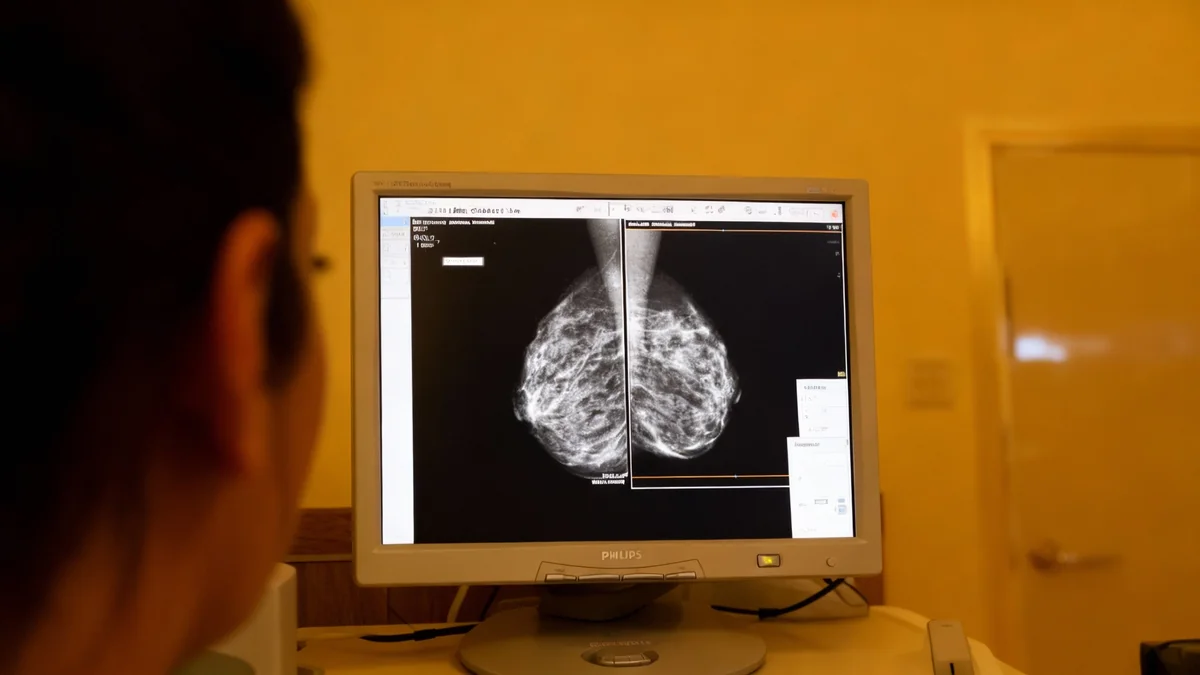

The primary method for testing accuracy involves checking the model's predictions against the stated wishes of patients who eventually recover. Ahmad hopes to develop a system that can accurately predict patient preferences about "two-thirds" of the time. However, he acknowledges this is just the first step in a long process.
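As a rough illustration of what that accuracy check amounts to, the sketch below computes the share of cases in which a model's prediction matches what the patient later said they wanted. The function name `agreement_rate`, the "accept"/"decline" labels, and the six toy cases are all hypothetical, invented only to show the arithmetic. They are not data or code from the UW project.

```python
def agreement_rate(predicted, stated):
    """Fraction of cases where the prediction matches the patient's stated wish."""
    if len(predicted) != len(stated):
        raise ValueError("predicted and stated must have the same length")
    if not predicted:
        raise ValueError("need at least one case")
    # Count the cases where the model and the recovered patient agree.
    matches = sum(p == s for p, s in zip(predicted, stated))
    return matches / len(predicted)


# Hypothetical toy labels, invented purely for illustration (not study data).
predicted = ["accept", "decline", "accept", "accept", "decline", "accept"]
stated = ["accept", "decline", "decline", "accept", "decline", "decline"]

rate = agreement_rate(predicted, stated)
print(f"agreement: {rate:.2f}")  # 4 of 6 cases match, so this prints 0.67
```

A score like this is only as meaningful as the stated wishes it is compared against, and it can only be computed for patients who recover well enough to state them.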

UW Medicine confirmed the project's early stage. Spokesperson Susan Gregg said there is "considerable work to complete prior to launch" and any system "would be approved only after a multiple-stage review process."

The Vision for Future AI Surrogates

In the future, Ahmad envisions more sophisticated models. These could analyze textual data from recorded conversations between patients and doctors or even communications with family members. In its ideal form, patients might interact with an AI throughout their lives, continually refining the model to reflect their evolving values.

Significant Ethical and Practical Hurdles

While the technology is innovative, many medical professionals are cautious. They point to fundamental challenges that an algorithm may never be able to overcome. End-of-life decisions are not simple binary choices; they are deeply personal and highly dependent on context.

"Decisions about hypothetical scenarios do not correlate with decisions that need to be made in real time. AI cannot fix this fundamental issue—it is not a matter of better prediction."– R. Sean Morrison, Mount Sinai

Dr. Emily Moin, an intensive care physician, explained that patient preferences are not static. Someone's wishes can change, sometimes within days, or after experiencing a life-saving treatment. "These decisions are dynamically constructed and context-dependent," she said.

The Problem of 'Ground Truth'

A key criticism focuses on how an AI model's accuracy is measured. Verifying a model by asking a recovered patient what they would have wanted is flawed, according to Moin. A person's perspective after survival is fundamentally different from their perspective before a critical event.

Moin is also concerned about the most vulnerable patients. "I imagine that they would actually want to deploy this model to help to make decisions for unrepresented patients, patients who can’t communicate, patients who don’t have a surrogate," she explained. For these individuals, "you’ll never be able to know what the so-called ground truth is, and then you’ll never be able to assess your bias."

A Study on AI Prediction Accuracy

A proof-of-concept study co-authored by Georg Starke showed that AI models could predict patient CPR preferences with up to 70% accuracy using survey data. However, Starke's team emphasized that accuracy alone is not enough and that human surrogates remain essential for understanding context.

The Role of Human Connection

Doctors stress that conversations between patients, families, and medical staff are irreplaceable. Dr. Moin worries that reliance on an AI tool could discourage these vital discussions, much as filling out an advance directive can sometimes lead patients to talk less with their loved ones about their wishes.

There is a fear that overworked clinicians and stressed family members might default to the "shortcut" of an AI's recommendation. "I do worry that there could be culture shifts and other pressures that would encourage clinicians and family members... to lean on products like these more heavily," Moin said.

AI as an Aid, Not an Authority

Most experts, including the researchers developing the technology, agree that AI surrogates should never replace human judgment. Teva Brender, a hospitalist in San Francisco, suggested AI could serve as a "launchpad" for discussions, helping a family consider factors they might have overlooked.

However, he cautioned that this requires transparency. "If a black-box algorithm says that grandmother would not want resuscitation, I don’t know that that’s helpful," Brender said. "You need it to be explainable." Without understanding how the AI reached its conclusion, it could sow distrust rather than provide clarity.

Navigating Bias and Fairness

Ahmad is actively considering the complex issue of fairness. His research explores how to build a model that respects a patient's unique moral framework, which could be influenced by religion, family duty, or a focus on personal autonomy.

"The central question becomes not only, ‘Is the model unbiased?’ but ‘Whose moral universe does the model inhabit?'" Ahmad wrote in a paper on the topic. Treating two patients with different value systems "similarly" in algorithmic terms could amount to "moral erasure."

This challenge is immense. An AI would need to interpret nuanced human values from data, a task far more complex than identifying clinical patterns. Ahmad acknowledges that it could be more than a decade before such technology is ready for any clinical application, if ever.

Advance Directives vs. AI Surrogates

For decades, health systems have encouraged patients to complete advance directives to document their end-of-life wishes. However, studies show these preferences can be unstable. AI surrogates are being explored as a more dynamic alternative, but they raise complex ethical and practical challenges of their own that written directives do not.

The Path Forward

The consensus among medical and ethics professionals is clear: proceed with extreme caution. AI surrogates will require extensive research, rigorous testing, and a broad public debate before they can even be considered for clinical settings.

Ahmad plans to test his conceptual models over the next five years to quantify their effectiveness. After that, he believes a societal decision will be needed on whether to integrate such a technology into healthcare.

Bioethicist Robert Truog emphasized the deeply personal nature of these decisions. He stated that his own choice would depend on his prognosis, his family's feelings, and financial consequences. "I would want my wife or another person that knew me well to be making those decisions," Truog said. "I wouldn’t want somebody to say, ‘Well, here’s what AI told us about it.'"

Ultimately, the development of AI surrogates forces a conversation about the limits of technology in the most human of moments. As Georg Starke concluded, "AI will not absolve us from making difficult ethical decisions, especially decisions concerning life and death."