A growing number of Americans are using artificial intelligence chatbots to research medical concerns, a trend that highlights a significant shift in how people access health information. Recent survey data indicates that more than one-third of the U.S. population has turned to AI for medical questions, with adoption rates even higher among younger demographics.

This development comes as the medical community itself integrates AI into professional practice to improve diagnostic accuracy and streamline workflows. However, the public's use of general-purpose AI for health advice raises important questions about accuracy, safety, and the future of patient care.

Key Takeaways

- Over 33% of Americans have used AI chatbots for medical information, according to a recent survey.

- Nearly 50% of individuals under the age of 35 have used these tools for health-related queries.

- While medical professionals use specialized AI to aid diagnoses, public use of general chatbots carries risks of misinformation.

- Experts advise caution, emphasizing that AI should not replace consultation with a qualified healthcare provider.

A New Source for Health Information

The accessibility of advanced AI models has led to a rapid increase in their use for a wide range of tasks, including seeking medical advice. People are turning to chatbots to understand symptoms, learn about medical conditions, and explore treatment options. This behavior is particularly prevalent among younger, digitally native generations.

According to the survey findings, 34% of all Americans have used a chatbot for medical research. Among those under 35, the figure rises to about 48%, pointing to a clear generational divide in information-seeking habits. The convenience and immediate availability of AI are major drivers of this trend.

Whereas getting a doctor's appointment can take days or weeks, AI chatbots respond instantly, at any hour. This immediacy appeals to people with pressing health questions or to those seeking preliminary information before consulting a professional.

Why People Use AI for Health Queries

Several factors contribute to the public's growing reliance on AI for health information. These include the desire for privacy when researching sensitive topics, the high cost of healthcare, and difficulties in accessing medical professionals in a timely manner. For many, AI serves as a free, confidential first step in their healthcare journey.

Professional vs. Public Use of AI in Medicine

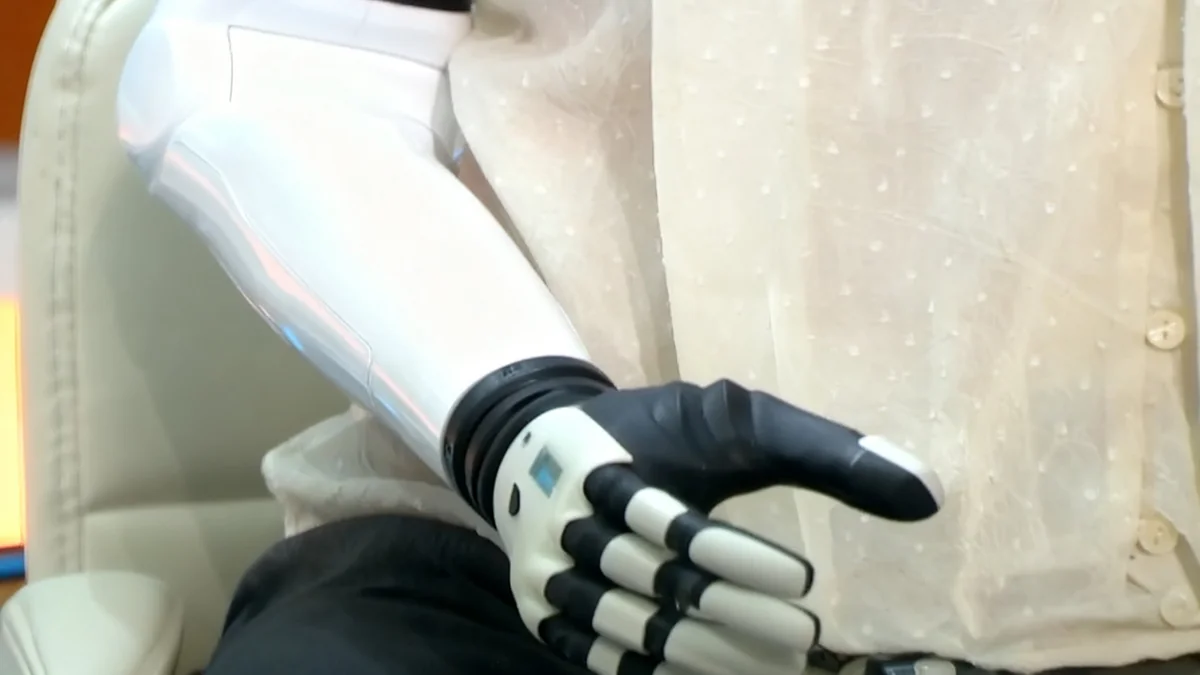

It is important to distinguish between the AI tools used by medical professionals and the general-purpose chatbots accessible to the public. In clinical settings, AI is becoming an invaluable assistant for physicians, but these systems are highly specialized and often regulated.

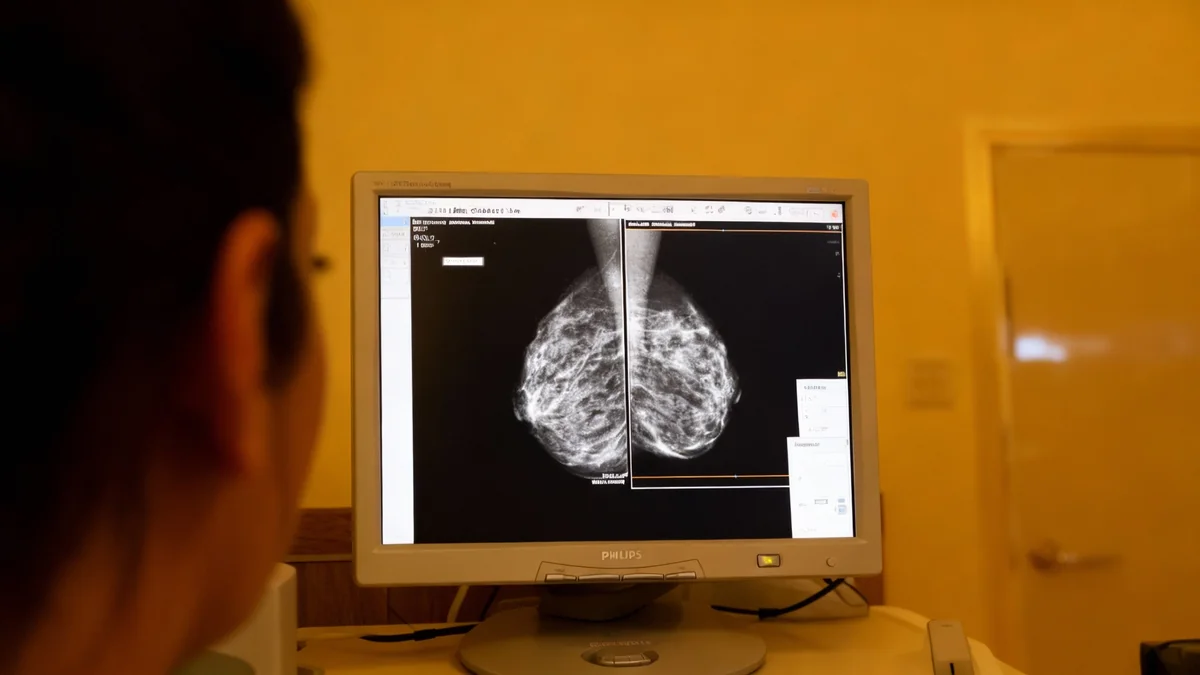

AI in the Clinic

Doctors are increasingly leveraging AI for specific tasks where the technology excels. These applications include:

- Medical Imaging Analysis: AI algorithms can analyze X-rays, MRIs, and CT scans to detect signs of diseases like cancer or stroke, often with a high degree of accuracy.

- Diagnostic Assistance: Some systems can analyze patient data, including symptoms and lab results, to suggest potential diagnoses for complex cases, helping doctors consider possibilities they might have overlooked.

- Drug Discovery: AI is used to accelerate the research and development of new medications by analyzing vast datasets to identify potential drug candidates.

These professional tools are designed for medical use and are typically validated through rigorous testing. They act as a support system for a doctor's expertise, not a replacement for it.

Fact Check: AI Diagnosis Accuracy

Research on general-purpose chatbots in health care has produced mixed results. A 2023 study published in JAMA Internal Medicine found that evaluators often rated ChatGPT's answers to patient questions as more empathetic than those written by physicians. Empathy is not accuracy, however: the diagnostic accuracy of general chatbots can be inconsistent, and they should not be relied upon for medical decisions.

Public-Facing Chatbots

In contrast, chatbots like ChatGPT, Gemini, or Claude are built on Large Language Models (LLMs) trained on vast amounts of text from the internet. They are not designed or validated as medical diagnostic tools and lack the specialized training and safety protocols of clinical AI.

Their primary function is to generate human-like text based on patterns in their training data. While this data includes medical information, it also contains inaccuracies, outdated content, and non-scientific opinions, which the AI may present as fact.

The Risks of Self-Diagnosis with AI

Relying on a general AI for medical advice carries significant risks. Healthcare experts warn that these tools can provide incomplete, inaccurate, or dangerously misleading information.

"An AI chatbot cannot perform a physical exam, understand a patient's full medical history, or appreciate the nuances of their individual circumstances," explains Dr. Evelyn Reed, a public health technology analyst. "Using it as a substitute for a doctor can lead to delayed diagnosis, incorrect self-treatment, or unnecessary anxiety."

Key dangers include:

- Misinformation: The AI may generate plausible-sounding but factually incorrect information, leading to poor health decisions.

- Lack of Context: A chatbot cannot understand the unique context of a user's health, lifestyle, and genetic predispositions, which are critical for accurate medical advice.

- Escalating Anxiety: AI can sometimes provide worst-case scenarios for common symptoms, causing undue stress and fear, a phenomenon known as "cyberchondria."

- Privacy Concerns: Sharing sensitive health information with a commercial chatbot raises significant privacy issues, as the data could be stored, analyzed, or used for other purposes.

A Tool for Education, Not Diagnosis

Despite the risks, experts believe AI can still play a positive role in patient education when used responsibly. Rather than asking an AI to diagnose a condition, a more effective approach is to use it as a learning tool.

For example, a person already diagnosed with a condition like diabetes can use an AI to ask for explanations of medical terms, request summaries of treatment guidelines, or get ideas for healthy recipes. In this capacity, the AI acts as a powerful search engine that can simplify complex topics.

The key is to use AI to supplement, not replace, professional medical advice. Patients should always verify information from a chatbot with their doctor and never make changes to their treatment plan without consulting a qualified healthcare provider. As AI technology continues to evolve, the development of specialized, reliable AI health assistants for the public may become a reality, but for now, caution remains the best approach.