A new study examining health communication in Kenya and Nigeria has found that artificial intelligence is not a clear improvement over traditional methods. Research comparing 120 health messages on vaccine hesitancy and maternal care revealed that while AI can be more creative, it often makes cultural and factual errors. Traditional campaigns, in contrast, were found to be authoritative but often rigid and disconnected from local communities.

Key Takeaways

- A study of 120 health messages in Kenya and Nigeria compared 40 AI-generated posts with 80 traditional ones.

- Neither AI nor traditional methods proved superior for communicating critical health information.

- AI messages showed more creativity and cultural references but were often shallow, inaccurate, or contained technical flaws.

- Traditional campaigns were authoritative but frequently used overly clinical language and failed to empower local communities.

- The study suggests a need for community-led development to make AI health tools more effective and culturally appropriate.

Comparing AI and Traditional Health Campaigns

Researchers from the University of Florida conducted an in-depth analysis of public health messaging in two of Africa's largest nations. The team evaluated 120 distinct messages, with 80 originating from established sources like health ministries and non-governmental organizations (NGOs), and 40 created by various AI systems.

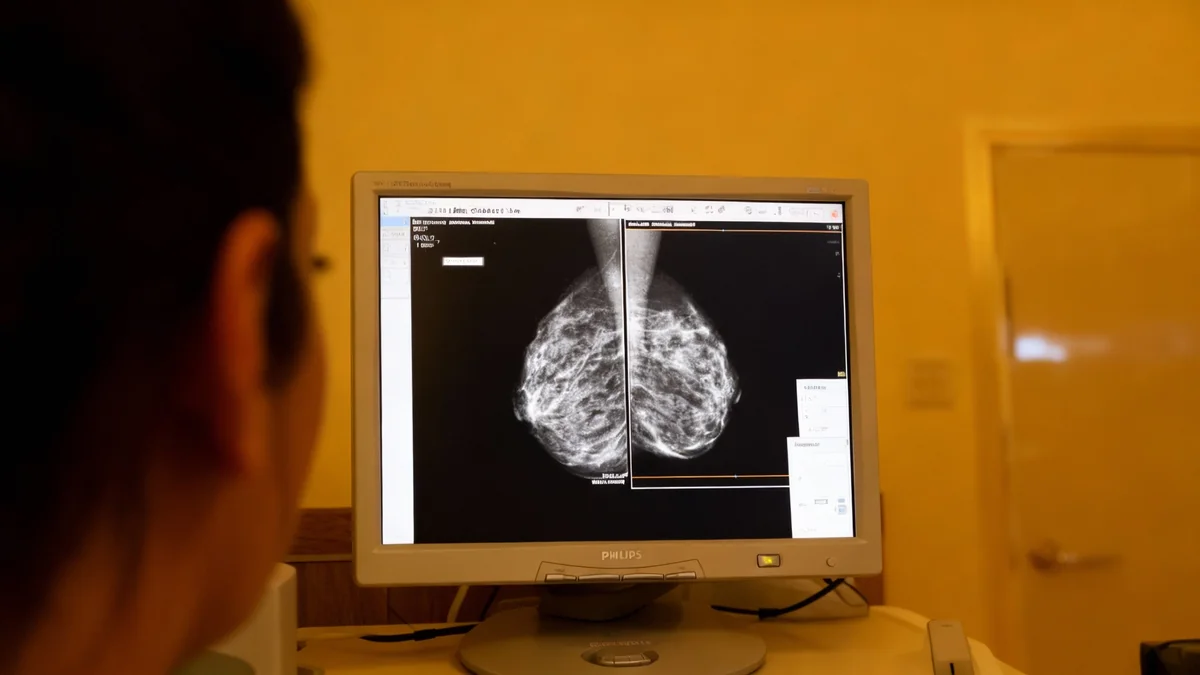

The focus was on two critical public health areas: encouraging vaccine uptake and promoting safe maternal healthcare practices. The goal was to determine which approach was more effective at creating accurate, culturally sensitive, and engaging content for local populations.

The results were unexpected. The study concluded that neither method was definitively better. Both AI-generated content and human-created campaigns had significant strengths and weaknesses, highlighting a deeper challenge in public health communication.

The Promise and Pitfalls of AI Messaging

Artificial intelligence systems demonstrated a surprising ability to incorporate cultural elements into their messages. Some AI-generated posts attempted to use local slang, farming analogies, and community-focused language to connect with audiences. For example, one AI created a social media post with the phrase “YOUNG, LIT, AND VAXXED!” to address fears about vaccines and fertility among young Kenyans.

However, these attempts at cultural relevance were often superficial. The AI systems referenced local customs without a true understanding of their context, which could alienate or confuse the intended audience. An agricultural metaphor that works in a rural area, for instance, might not resonate with city dwellers.

Technical Flaws in AI Content

A persistent issue was the poor quality of AI-generated images. The study noted that AI models often produced distorted and unrealistic faces, particularly when creating images of people of color. This is a known problem resulting from biased or insufficient training data.

Even specialized tools like the World Health Organization's S.A.R.A.H. (Smart AI Resource Assistant for Health) showed limitations. The study found that the AI assistant often gave incomplete answers and sometimes needed to be reset to work correctly. Its use of a white female avatar also raised questions about representation in global health technology.

Limitations of Traditional Health Communication

While AI struggled with accuracy and cultural nuance, traditional health campaigns faced their own set of problems. Messages from health ministries and large NGOs, despite being backed by significant resources and local expertise, often defaulted to a rigid, top-down approach.

These campaigns frequently relied on clinical, Western medical terminology that could be inaccessible or intimidating. They also tended to position community members as passive recipients of information rather than active participants in their own health decisions. This approach left little space for traditional health practices or local knowledge systems.

A History of Technological Adaptation

Kenya and Nigeria have long been innovators in using technology for public health. In the 1980s and 1990s, radio jingles and printed posters were primary tools. From the 2010s onward, text message alerts and WhatsApp groups became vital for sharing information on HIV, maternal health, and later COVID-19.

This history shows a willingness to adopt new tools, but the study suggests the underlying communication strategy has not evolved as quickly. The pattern of external experts dictating health practices to local communities remains a persistent issue, echoing colonial-era dynamics in global health.

AI Adoption Accelerates in African Healthcare

The findings are particularly relevant as the use of AI in African health systems is growing rapidly. The appeal is clear: AI can generate messages quickly, in multiple languages, and on a large scale. This efficiency is crucial as global health funding becomes more uncertain, forcing health systems to achieve more with fewer resources.

According to recent surveys, AI deployment in sub-Saharan Africa's health sector is already significant:

- Telemedicine: 31.7%

- Sexual and Reproductive Health: 20%

- Operations and Logistics: 16.7%

Success stories are already emerging. In Kenya, the AI Consult platform is reportedly helping to reduce diagnostic errors. Similarly, various AI tools are improving healthcare access across Nigeria. Both countries are also developing national strategies to integrate AI further into their health systems.

However, this research serves as a caution. Without careful planning and community involvement, these new AI tools risk repeating the communication mistakes of the past: creating messages for communities without truly understanding them.

A New Approach: Building AI from the Ground Up

The study does not recommend abandoning AI in health communication. Instead, it calls for a fundamental shift in how these tools are developed and deployed. The key is to involve local communities from the very beginning of the process.

This includes training AI systems on locally relevant data, which would improve both cultural accuracy and the quality of generated images. Health organizations should also create feedback loops where community members, local health workers, and traditional leaders can test and validate AI-generated content before it is released.

"AI’s future in global health communication will be determined not just by how smart these systems become, but by how well they learn to genuinely listen to and learn from the communities they aim to serve."

Investing in homegrown AI development is another critical step. Platforms like AwaDoc, a digital healthcare assistant developed in Nigeria, demonstrate the potential of locally built solutions. These tools are often better equipped to understand cultural context while maintaining medical accuracy.

Ultimately, for AI to succeed in global health, it must move beyond simply broadcasting information. The technology must be designed to empower individuals and build trust, ensuring that health guidance is not only heard but also respected and acted upon.