Artificial intelligence chatbots are increasingly becoming a primary source of medical advice and emotional support for patients in China, many of whom must navigate an overburdened healthcare system. The shift highlights both AI's potential to ease problems of healthcare access and serious concerns about diagnostic accuracy and patient safety.

Key Takeaways

- Chinese patients use AI chatbots like DeepSeek for daily health advice and emotional support.

- The national healthcare system faces severe inequalities and doctor shortages, especially in rural areas.

- AI models can score well on medical exams but struggle with real-world clinical judgment.

- Nephrologists have identified significant errors in AI-generated treatment plans, raising safety concerns.

- Despite risks, patients value the accessibility, empathy, and perceived equality offered by AI doctors.

The Appeal of AI in a Strained Healthcare System

For many Chinese citizens, accessing timely and comprehensive medical care can be a challenge. Hospitals, especially top-tier facilities, are often crowded, leading to brief consultations with doctors who may see over 100 patients daily. This environment leaves many patients feeling unheard and rushed.

AI chatbots offer an alternative. They are always available, respond instantly, and provide detailed, empathetic interactions. This constant availability and supportive tone can make bots feel like trusted partners, particularly for those who feel isolated or lack consistent human care.

Fact Check

- China's top hospitals are concentrated in economically developed eastern and southern regions.

- Doctors' salaries are often low, and bonuses tied to departmental profits can create incentives to order more procedures.

- An aging population adds to the strain on a healthcare system that already faces widespread distrust.

A Personal Experience with DeepSeek

A 57-year-old kidney transplant patient in eastern China came to rely on DeepSeek, a leading AI chatbot, for medical guidance. Previously, seeing a doctor meant a two-day trip to Hangzhou for an appointment lasting three to five minutes. DeepSeek offered something very different.

She used the app on her iPhone, asking about symptoms like high mean corpuscular hemoglobin concentration and nighttime urination. She also sought advice on diet, exercise, and medications, sometimes spending hours in virtual consultations. She uploaded ultrasound scans and lab reports, adjusting her lifestyle based on DeepSeek's interpretations. At one point, she even reduced her immunosuppressant medication intake at the bot's suggestion.

"DeepSeek is more humane," the patient stated in May. "Doctors are more like machines."

The Rise of AI in Chinese Healthcare

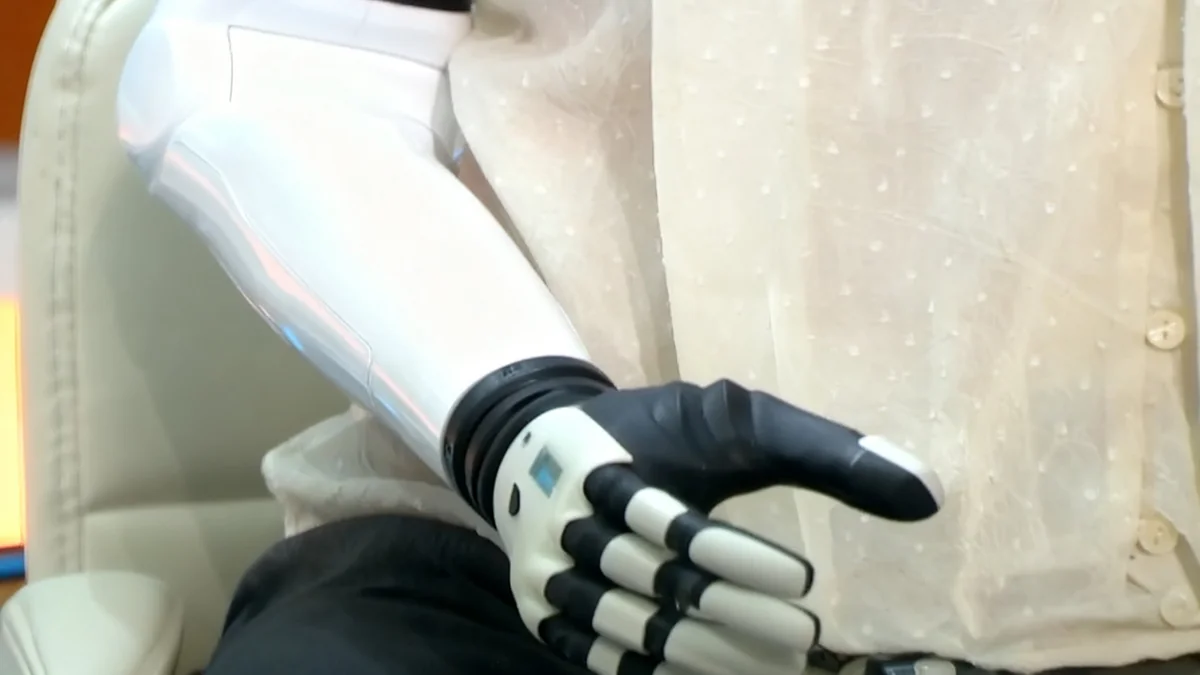

The integration of large language models (LLMs) into healthcare is not limited to individual users. Since the release of DeepSeek-R1 in January, hundreds of hospitals across China have incorporated AI into their operations. These AI-enhanced systems help process patients' initial complaints, write charts, and suggest diagnoses.

Major hospitals are collaborating with tech companies, using patient data to train specialized AI models. For example, a hospital in Sichuan province introduced "DeepJoint" for orthopaedics, analyzing CT and MRI scans to generate surgical plans. Another in Beijing developed "Stone Chat AI" to answer questions about urinary tract stones.

Background on China's Healthcare Disparities

China's healthcare system suffers from significant inequalities. Top medical talent and resources are concentrated in urban centers, forcing patients from smaller cities and rural areas to travel long distances for specialized care. This creates immense pressure on urban hospitals and limits access for many.

As the generation born under the one-child policy watches its parents age, a public senior-care infrastructure that is still developing is coming under further strain. Many adult children live far from their elderly parents, making consistent care difficult. AI offers a constant, accessible presence for these families.

AI's Role Beyond Diagnosis

Beyond medical advice, AI chatbots provide emotional support and a sense of equality. Patients often report feeling rushed or even scolded by human doctors, and afraid to ask too many questions. With AI, they can lead the conversation and explore their concerns without judgment.

The patient described earlier reported everything to DeepSeek, from changes in kidney function to blurry vision and blood oxygen levels. The bot responded confidently with bullet points, emojis, tables, and flow charts, often adding encouraging remarks like "You are not alone."

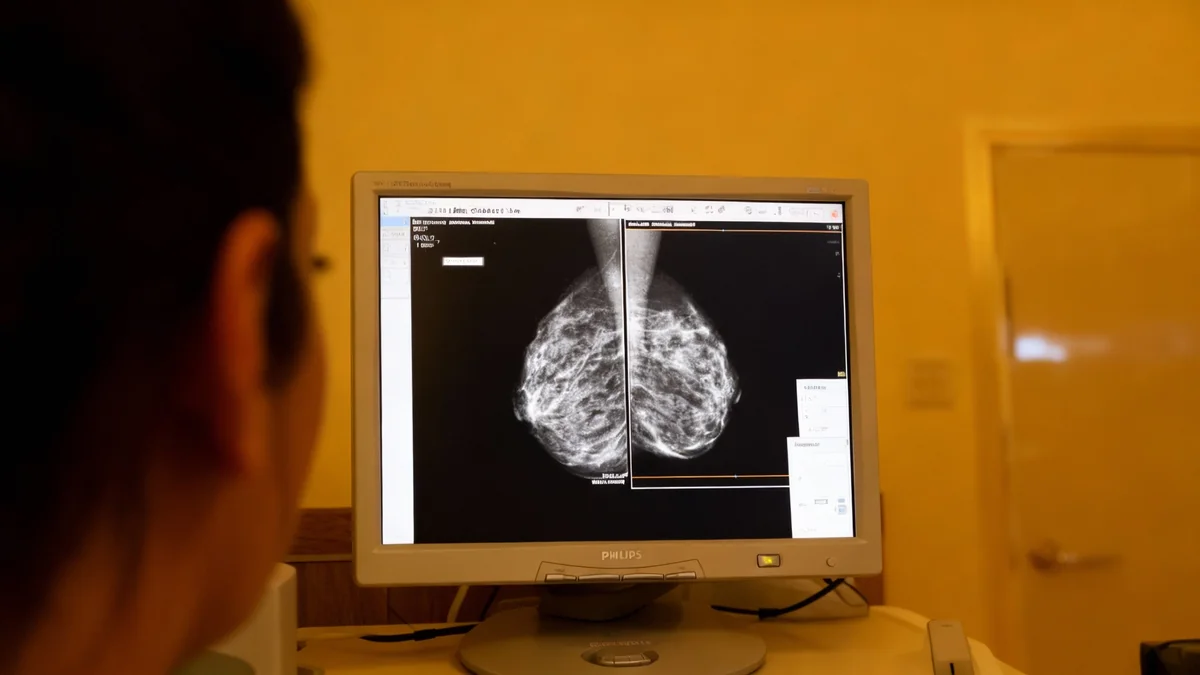

AI's Performance in Medical Tests

- A 2023 study found that ChatGPT achieved the equivalent of a passing score for a third-year US medical student on the US Medical Licensing Examination.

- Google's Med-Gemini models reportedly performed even better on similar benchmarks last year.

- Some studies suggest chatbots can outperform physicians in diagnosing specific conditions like eye problems and emergency room cases in simulated environments.

The Critical Concerns: Accuracy and Ethics

Despite the perceived benefits and impressive test scores, the real-world application of AI in healthcare raises serious concerns. Nephrologists reviewing conversations between the patient and DeepSeek identified significant errors in the bot's advice.

For instance, one suggestion to treat her anemia with erythropoietin could increase the risk of cancer and other complications. Other treatments the bot recommended for kidney function were described as unproven, potentially harmful, or "a kind of fantasy." DeepSeek also confused the patient's original diagnosis with a rare kidney disease and suggested the wrong blood tests.

Dr. Melanie Hoenig, an associate professor at Harvard Medical School, called some of DeepSeek's advice "sort of gibberish, frankly." She added, "For someone who does not know, it would be hard to know which parts were hallucinations and which are legitimate suggestions."

Challenges in Real-World Clinical Judgment

Researchers note that chatbots' performance on medical exams does not directly translate to real clinical practice. Exams present clear symptoms, while real patients describe problems vaguely and often lack correct medical terminology. Diagnosing requires observation, empathy, and clinical judgment that AI currently lacks.

A study in Nature Medicine found that AI models performed significantly worse in simulated patient interactions than on exams, struggling to ask the right questions and to connect scattered pieces of medical history or symptoms. Large language models also tend to agree with users even when the users are wrong, adding another layer of risk.

Ethical Considerations

- Consent: Patients may not fully understand the limitations or risks of AI advice.

- Accountability: Who is responsible if an AI provides incorrect, harmful advice?

- Bias: AI models can perpetuate or exacerbate existing healthcare disparities, potentially missing diseases in marginalized groups.

- Regulation: China has banned AI from generating prescriptions, but oversight of other advice remains limited.

The Future of AI in Healthcare

The tech industry views healthcare as a promising frontier for AI. Companies like DeepSeek are actively recruiting interns to annotate medical data to improve model accuracy and reduce hallucinations. Alibaba's healthcare-focused chatbot, built on its Qwen LLMs, has reportedly passed China's medical qualification exams across 12 disciplines.

Even popular apps like Douyin and Alipay now offer rudimentary "AI doctors" for basic advice, report interpretation, and appointment booking. These systems are often supervised by human doctors and are intended to improve efficiency and access.

However, experts like Lu Tang, a professor at Texas A&M University studying medical AI ethics, caution against overstating AI's ability to reduce health disparities. Models developed in major cities may not be effective for rural populations, potentially widening the gap rather than closing it.

Balancing Innovation with Patient Safety

The patient who relied on DeepSeek eventually recognized that the bot sometimes gave contradictory advice and came to understand its limitations. Yet she continued to use it, valuing its constant presence and the freedom to ask questions without judgment. When faced with a low white blood cell count, she first asked DeepSeek about follow-up tests before reluctantly agreeing to see her human nephrologist.

This illustrates the complex relationship emerging between patients and AI. While the convenience and emotional support are undeniable, the potential for incorrect diagnoses and harmful advice remains a critical challenge. The ongoing development of AI in healthcare demands a careful balance between innovation and rigorous oversight to ensure patient safety and equitable care.