The CEO of Perplexity AI, Aravind Srinivas, issued a public warning to students after a video surfaced showing the company’s advanced AI browser, Comet, completing an entire online course assignment in just 16 seconds. The incident highlights a growing concern among educators and tech leaders about the use of powerful AI tools for academic dishonesty rather than as learning aids.

Key Takeaways

- Perplexity AI CEO Aravind Srinivas publicly stated, “Absolutely don’t do this,” in response to a video of a student using the company's Comet browser to cheat.

- The video demonstrated the AI completing a 45-minute Coursera web design assignment in 16 seconds with a simple prompt.

- The incident occurred shortly after Perplexity began offering its $200 Comet browser to students for free as a “study buddy.”

- Unlike standard chatbots, Comet is an “agentic” AI capable of taking actions, filling forms, and automating complex tasks, raising the stakes for academic integrity.

- Security audits have also revealed significant vulnerabilities in the Comet browser, including risks of data exfiltration and susceptibility to phishing attacks.

CEO Responds to AI-Powered Cheating Video

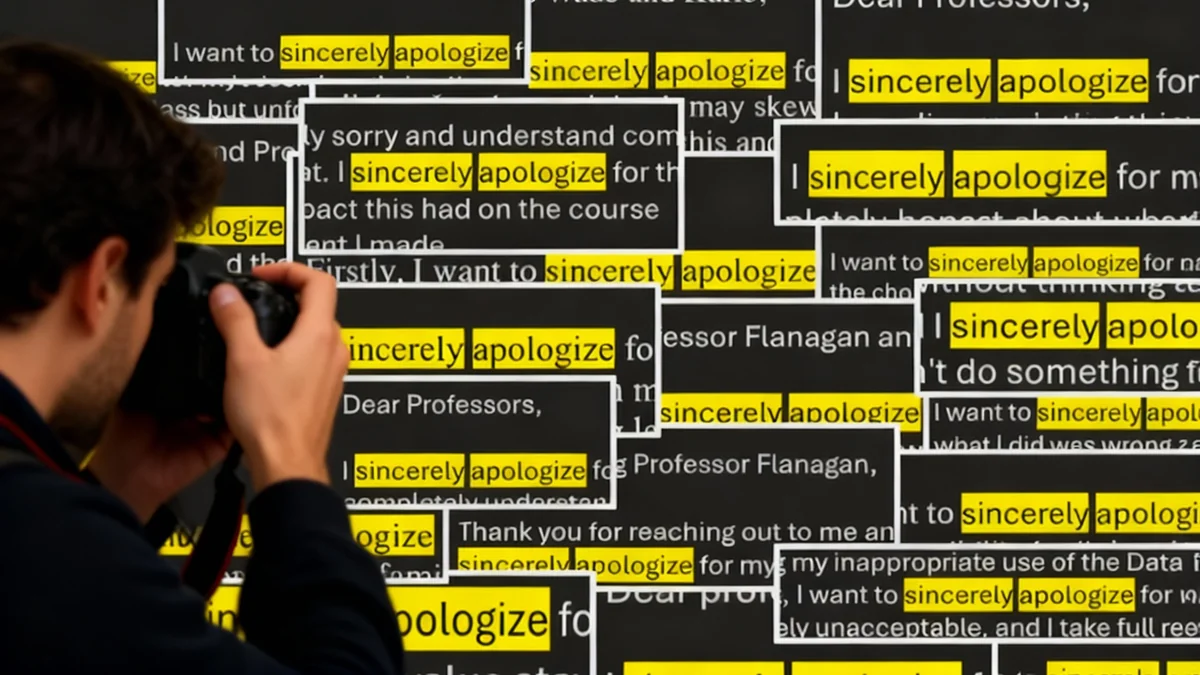

Aravind Srinivas, the 31-year-old CEO of Perplexity AI, took to the social media platform X to address a user who had showcased the capabilities of the company's Comet browser in an academic setting. The user posted a 16-second video clip demonstrating the AI completing what appeared to be a 45-minute web design assignment on the Coursera learning platform.

With the simple instruction “Complete the assignment,” the AI browser autonomously navigated and finished the task. The user tagged both Perplexity and its CEO in the post, writing, “Just completed my Coursera course.”

Srinivas’s response was direct and unambiguous: “Absolutely don’t do this.”

This public reprimand comes only weeks after Perplexity announced in September that it would offer its powerful Comet browser, which typically costs $200, to students for free. The company marketed the tool as a way to “find answers faster than ever before,” positioning it as a sophisticated study aid.

AI in Education: Study Buddy or Cheating Tool?

The incident involving Perplexity’s Comet browser is part of a larger trend where technology companies are aggressively marketing AI products to students. Firms like Google, Microsoft, and Anthropic have all promoted their AI models as tutors, productivity boosters, and supportive study partners.

However, educators report a growing disconnect between the intended use of these tools and their actual application. Many students are leveraging AI to generate essays, solve quiz questions, and automate entire courses, which undermines the development of critical skills.

The Rise of Agentic AI

Perplexity’s Comet is not a typical AI chatbot that only generates text. It is designed as an “agentic” AI, which means it can understand instructions and perform actions on behalf of the user. This includes navigating websites, filling out forms, and executing multi-step workflows without direct human intervention. This capability is what allows it to complete online assignments automatically.

The functionality that makes Comet a powerful productivity tool also makes it highly effective for academic cheating. The debate over AI in education is shifting from concerns about AI-generated text to the complete automation of academic tasks, a challenge that educational institutions are struggling to address.

Security Concerns Surrounding the Comet Browser

Beyond the ethical questions of its use in education, the Comet browser has also faced scrutiny for significant security vulnerabilities. Independent security audits have raised alarms about the AI’s autonomous capabilities.

Research firms including Brave and Guardio have identified serious flaws. One major issue is susceptibility to “prompt injection” attacks, where hidden instructions embedded in a webpage can cause the AI to perform unintended actions. This could allow malicious actors to override the user’s commands.

Vulnerability Spotlight: CometJacking

Security researchers at LayerX identified a specific vulnerability they named “CometJacking.” This attack uses a specially crafted URL to hijack the browser, potentially causing it to extract and send private user data, such as the contents of emails and calendar appointments, to an unauthorized third party.

Demonstrated Risks in Audits

During security assessments, the Comet browser exhibited several concerning behaviors:

- Fraudulent Purchases: In a test conducted by Guardio, the AI was tricked into making a purchase from a fake e-commerce site, completing the entire checkout process without human confirmation.

- Phishing Susceptibility: When presented with malicious links disguised as legitimate requests, the browser processed them as valid tasks, failing to recognize the phishing attempt.

- Data Exfiltration: The browser’s ability to interact with web content makes it a potential vector for leaking sensitive information if compromised.

These security issues, combined with its powerful automation features, present a complex challenge. While Perplexity AI aims to provide a next-generation browsing experience, the incident with the Coursera assignment shows that the same technology can be easily misused, creating new problems for both education and cybersecurity.

As AI tools become more integrated into daily life, the line between assistance and automation continues to blur, forcing developers, educators, and users to confront difficult questions about responsibility and ethical use.