New ChatGPT Flaw 'ZombieAgent' Exposes User Data

A new vulnerability in ChatGPT, named ZombieAgent, bypasses previous security fixes to extract private user data, highlighting a persistent security challenge in AI.

Top technology firms like Google, OpenAI, and Anthropic are racing to fix critical security flaws in AI that could expose millions to sophisticated cyberattacks.

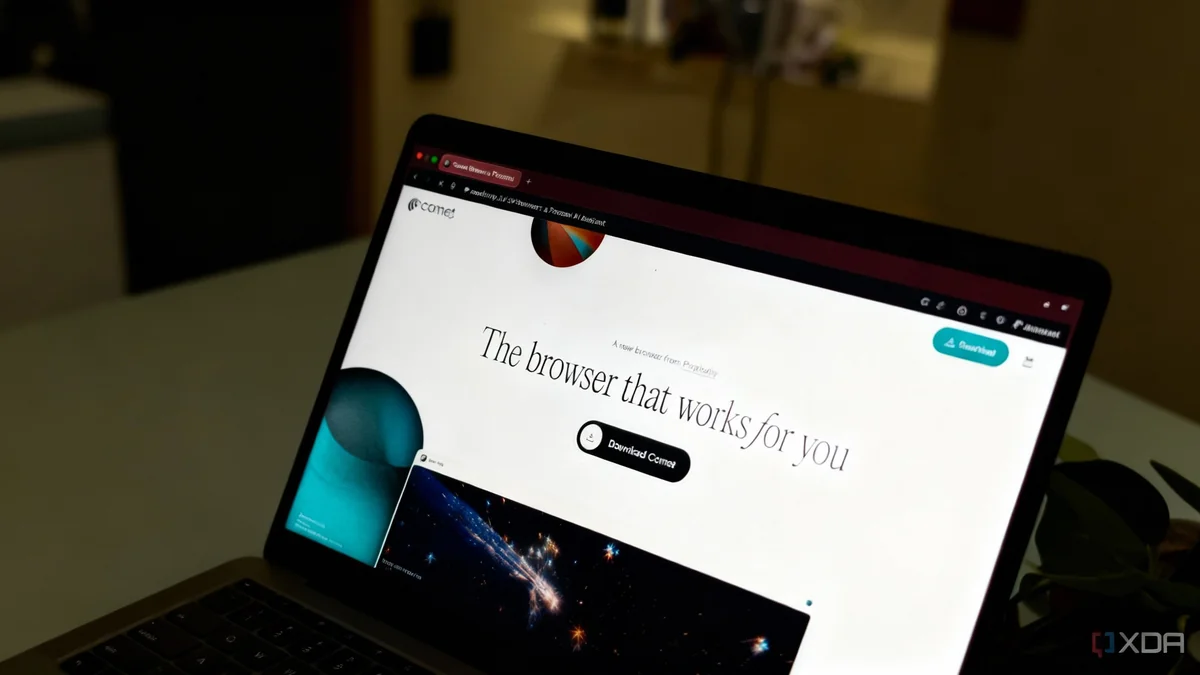

New AI-powered web browsers promise convenience but introduce severe security risks like prompt injection, which can allow attackers to steal personal data.

Google has patched three major security flaws in its Gemini AI assistant that could have exposed user data and compromised cloud services through prompt injection attacks.

Large Language Models have a core architectural flaw that prevents them from separating instructions from data, making them vulnerable to prompt injection attacks.

A critical flaw named ForcedLeak in Salesforce's Agentforce AI platform allowed attackers to steal CRM data via prompt injection, researchers report.

The simplicity of commanding AI systems in natural language creates fundamental security vulnerabilities that may be impossible to fully patch.