The nature of warfare is undergoing a rapid and profound transformation, driven by advances in artificial intelligence, synthetic biology, and quantum computing. From remotely controlled drones in Ukraine to sophisticated AI targeting systems, new technologies are reshaping how conflicts are fought and how nations prepare for future threats. This shift demands that global leaders urgently adapt their strategies and weigh the ethical implications of these emerging capabilities.

Key Takeaways

- AI and autonomous weapons are rapidly changing global military strategies.

- Small, inexpensive drones are proving highly effective on modern battlefields.

- Major powers like the US and China are investing heavily in AI-driven military tech.

- Concerns about ethical use, civilian casualties, and bioweapon development are growing.

- International treaties and public-private collaboration are crucial for managing these new risks.

The New Era of Battlefield Dominance

In current conflicts, such as the war in Ukraine, small and affordable remotely controlled weapons have proven highly effective. These drones, often built from readily available commercial parts, offer a cheap yet powerful means of reconnaissance and targeted strikes. This trend marks a shift away from exclusive reliance on expensive, complex military hardware.

Nations around the world are observing these developments. China, for instance, is actively testing drone swarms designed to operate in unison. These swarms could autonomously identify and engage targets, potentially without direct human input. This represents a substantial leap in military capability, raising questions about control and accountability.

Emerging Warfare Technologies

- Autonomous Drone Swarms: Groups of robotic aircraft working together to find and eliminate targets.

- Advanced Cyberweapons: Tools capable of disabling military forces and critical infrastructure.

- AI-Designed Bioweapons: Pathogens that could be engineered to target specific genetic characteristics.

Global Race for Technological Superiority

The course of human history has often been shaped by advances in warfare. From ancient chariots to nuclear missiles, each era introduced new forms of combat. Today, weapons are being developed faster than ever before, and experts point to several emerging technologies that could redefine conflict worldwide.

The United States currently holds a lead in some areas, particularly in AI, largely due to significant investments from its private sector. However, countries like China and Russia are accelerating their state-backed investments. They are integrating these innovations into their militaries through purpose-built universities and strategic deployment.

"The speed of warfare will soon outpace human ability to control it," said Andrew C. Weber, a former Pentagon official who oversaw nuclear, chemical, and biological defense programs.

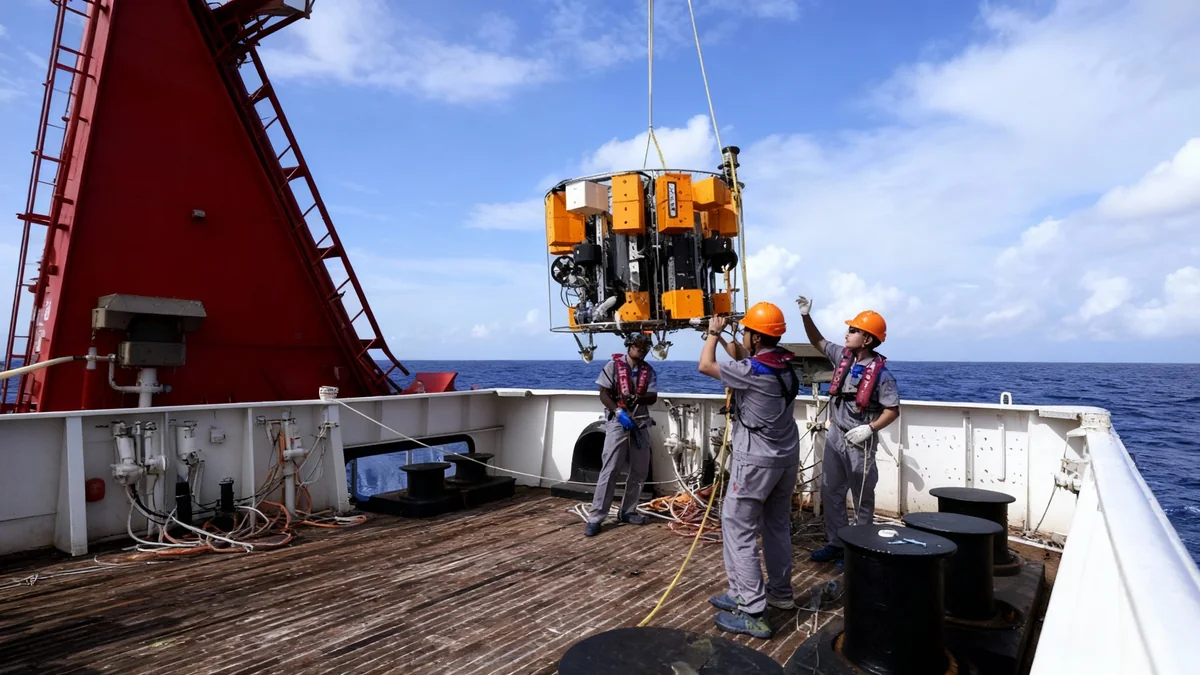

China's Maritime Drone Innovations

Chinese technology companies have already developed and deployed a diverse range of maritime drones. These include surface vessels, autonomous submarines, and research drones, indicating a broad strategic focus on unmanned systems across different domains.

To keep pace in this 21st-century arms race, strong political will and national coordination are essential. That coordination must bridge the public sector, the private sector, and research institutions. The Pentagon, for its part, needs to adapt its recruitment, training, and strategies to fully embrace these technological changes.

Lessons from History

During World War II, American science overcame an initial disadvantage against Germany and ultimately contributed to victory through breakthroughs such as the atomic bomb. That success relied heavily on collaboration among government, academia, and private companies. A similar collaborative effort is needed today, especially for AI, much of which is being developed in the private sector.

The Pentagon's AI Initiative: Project Maven

America's national security establishment is actively adapting to this evolving landscape. The National Geospatial-Intelligence Agency (NGA) is at the forefront of developing the military's AI targeting system, known as Project Maven. This program processes vast amounts of data from satellites, spy planes, and other sensors.

Maven's imagery analysis program identifies objects much faster than human analysts. It is now deployed in every major U.S. military command headquarters globally. The system has provided targeting suggestions in Iraq, Syria, and Yemen, leading to successful strikes. It has also supplied intelligence used by Ukraine against Russian targets.

The program can identify military assets such as rocket launchers, troop formations, and battleships. Crucially, it also tags objects on no-strike lists, including hospitals, schools, and religious buildings. The NGA has informed Congress that these algorithms become faster and more reliable as they are fed new intelligence data daily.

Private Sector Collaboration

This software-driven approach requires significant private sector involvement. Palantir, a data analytics company, is a key contractor. It integrates intelligence data from agencies like the NGA and presents it through a program called Maven Smart System, used by military intelligence officers worldwide on classified networks.

Anduril, a defense startup, recently secured an Army contract for an AI-powered drone defense program. Co-founded by Oculus inventor Palmer Luckey, Anduril makes software that fuses information from radar and other detection systems, allowing human operators to target and destroy enemy drones more quickly and effectively.

In a recent test over the Mojave Desert, Anduril flew a dart-shaped drone called Fury under AI control for the first time. The Pentagon aims to field a fleet of 1,000 robotic wingmen: autonomous aircraft that would fly alongside crewed fighter jets and carry out dogfighting, reconnaissance, and electronic warfare missions.

Adversaries' Advances and Ethical Challenges

America's rivals are also making significant strides. China has released footage of what appears to be a robotic wingman undergoing flight tests. Ukrainian forces have captured a Russian drone, the V2U, capable of flying dozens of miles, identifying targets, and delivering explosives autonomously. This drone, built with commercial parts, costs significantly less than its American or Chinese counterparts.

For decades, the United States has favored complex, bespoke weapons systems that take years to develop. The F-35 Lightning II fighter, for example, boasts advanced capabilities, but its intricate design demands extensive maintenance that frequently keeps the aircraft grounded. This reliance on expensive, delicate systems creates vulnerabilities, especially if adversaries disrupt the space-based networks used for communication and tracking. According to former Deputy Defense Secretary Bob Work, China's military strategy aims to break apart American battle networks using cyberweapons and jamming.

The Ethical Minefield of AI Warfare

The rise of autonomous weapons presents significant ethical dilemmas. Handing decisions over to machines can increase the risk to civilians. Israel's use of AI-enabled surveillance systems in Gaza, for instance, has generated controversy, with reports that these systems misidentified civilians as combatants, leading to tragic outcomes.

The dangers extend beyond conventional battlefields. Experts warn that AI advancements could usher in a new era of bioterrorism. With basic coding skills, a laptop, and internet access, individuals could direct AI programs to scour databases for ways to enhance viruses, making them more contagious or lethal. Specific genetic groups or even animal species could become targets.

This year, major AI companies such as OpenAI and Anthropic warned that AI could help bad actors create bioweapons. Students at MIT reportedly used chatbots to identify four potential pandemic pathogens, learn how they could be generated from synthetic DNA, find companies unlikely to screen DNA orders, and identify research organizations that might assist, all within an hour.

The Path Forward: Regulation and Collaboration

The world is largely unprepared for these rapid technological shifts. Deterrence alone may not prevent the catastrophic use of new weapons. The United States must engage in international efforts to negotiate and sign treaties that limit the deployment and use of autonomous weapons systems.

The United Nations secretary-general and the International Committee of the Red Cross have called for a new treaty on autonomous weapons systems by 2026. Such a treaty should limit the types of targets these weapons may engage and prohibit their use where civilians are present. It should also set requirements for human-machine interaction that ensure effective human supervision and allow timely intervention and deactivation.

Additionally, the administration should push for comprehensive requirements on companies that sell equipment used to create biological agents. These companies should be required to verify their customers' identities and the nature of their work. The 50-year-old Biological Weapons Convention, with nearly 190 participating states, also needs to be amended to address current technological advances.

America must also reverse its approach to universities, returning to long-term, open-ended investment in future technologies through research and development grants. Expanding investments in nascent private industry technologies, such as autonomous drones, is also crucial. Finally, tighter export controls on advanced AI chips are necessary to prevent them from reaching adversarial nations.

From the battlefields of Ukraine to the sophisticated security measures seen in high-level diplomatic meetings, it is clear that geographical barriers no longer offer sufficient protection against adversaries employing AI as part of a comprehensive strategy. America must simultaneously lead in building autonomous weapons and in establishing controls for their safe and ethical use.