Qualcomm has officially announced its entry into the competitive artificial intelligence accelerator market, a move that positions the company to compete directly with established leaders Nvidia and AMD. The San Diego-based technology firm revealed a new line of AI-focused hardware designed for data centers, signaling a significant strategic expansion beyond its core mobile chip business.

The company detailed plans for its Qualcomm AI200 and AI250 accelerator cards, which are built on its proprietary neural processing unit technology. This initiative aims to capture a share of a rapidly growing market driven by the intense computational demands of generative AI models.

Key Takeaways

- Qualcomm is launching new AI accelerator cards, the AI200 and AI250, to compete in the data center market.

- The move challenges the dominance of Nvidia and AMD in the high-demand AI hardware sector.

- The company has already secured a significant customer, Saudi AI firm Humain, for its new technology.

- This expansion is part of Qualcomm's broader strategy to diversify its revenue streams beyond the mobile handset market.

A New Contender in the AI Arena

Qualcomm's entry into the AI infrastructure space comes with a clear product roadmap. The company has committed to an annual release schedule, matching the pace set by its primary competitors. The first product, the Qualcomm AI200, is slated for release in 2026.

Following the AI200, the AI250 is scheduled to become available in 2027. Qualcomm also indicated that a third accelerator is already planned for 2028, reinforcing its long-term commitment to this new market segment.

Technical Specifications and Goals

The AI200 accelerator card is designed to support 768 GB of LPDDR memory. According to the company, this configuration is intended to provide higher memory capacity at a lower cost, addressing a key challenge in large-scale AI deployment.

For the AI250, Qualcomm is planning a more advanced memory architecture based on near-memory computing. The company projects this design will deliver a tenfold increase in effective memory bandwidth while simultaneously reducing power consumption, a critical factor for modern data centers.

Durga Malladi, an executive at Qualcomm, stated that the new solutions aim to redefine rack-scale AI inference. He emphasized that the goal is to allow customers to deploy generative AI with an unprecedented total cost of ownership while ensuring the flexibility and security required by data centers.

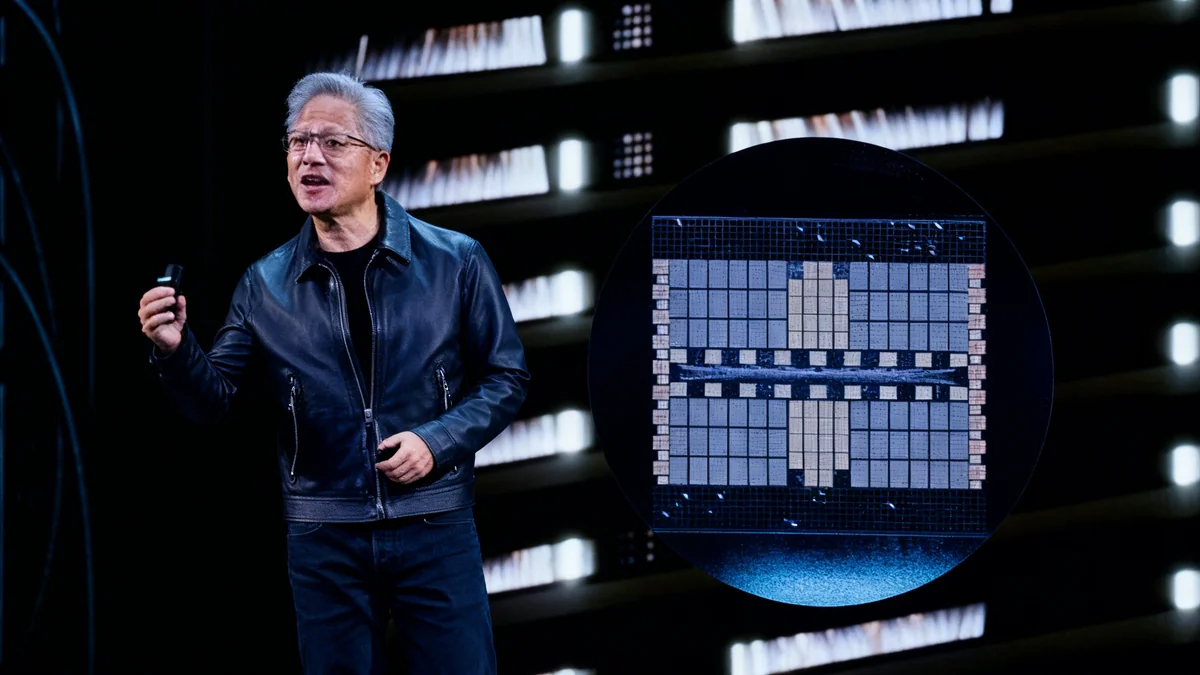

Market Landscape and Competitive Pressures

Qualcomm is stepping into a market that AMD CEO Lisa Su has estimated to be worth over $500 billion. The field is currently dominated by Nvidia, which has established a commanding lead in AI hardware.

By the Numbers: The AI Chip Market

- Nvidia's Dominance: In its most recent quarter, Nvidia reported data center revenue of $41.1 billion, a 56% increase year-over-year. This segment accounts for about 88% of its total quarterly revenue of $46.7 billion.

- AMD's Position: AMD's data center segment generated $3.2 billion in its latest quarter, out of a total revenue of $7.685 billion.

- Qualcomm's Starting Point: While a leader in mobile, Qualcomm's data center presence is nascent, presenting both a challenge and a significant growth opportunity.
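The revenue shares implied by the figures above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the helper name `share_pct` and the dictionary layout are assumptions made for this example, and the inputs are simply the dollar amounts quoted in the list, in billions.

```python
def share_pct(segment, total):
    """Return segment revenue as a percentage of total revenue."""
    return 100.0 * segment / total


# (data center revenue, total revenue) in billions of USD, as quoted above.
figures = {
    "Nvidia": (41.1, 46.7),
    "AMD": (3.2, 7.685),
}

# Data center share of total revenue for each company.
shares = {name: share_pct(seg, tot) for name, (seg, tot) in figures.items()}

for name, pct in shares.items():
    print(f"{name}: data center is {pct:.1f}% of quarterly revenue")
```

On these inputs, the data center segment works out to roughly 88% of Nvidia's quarterly revenue and roughly 42% of AMD's.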

While Nvidia holds the majority market share, AMD and other tech giants are actively developing competing solutions. Qualcomm's entry adds another major player to the race, potentially increasing competition and providing customers with more options for building AI infrastructure.

Strategic Diversification and Early Wins

This move is a cornerstone of Qualcomm's strategy to diversify its business. The company has historically relied heavily on its handset chip division, which generated $6.33 billion of its $8.99 billion in total semiconductor revenue in the last reported quarter.

However, Qualcomm has been making steady progress in other areas. Its automotive revenue grew 21% year-over-year to $984 million, and its Internet of Things (IoT) division saw a 24% increase in sales, reaching $1.68 billion.

Beyond Mobile Phones

For years, Qualcomm has been synonymous with the processors inside smartphones. This expansion into data center AI represents the company's most ambitious effort yet to leverage its chip design expertise in new, high-growth markets. Success in this area could fundamentally reshape the company's financial profile and reduce its dependence on the cyclical mobile phone industry.

Even before the official launch, Qualcomm has secured a major customer for its new accelerators. The Saudi artificial intelligence company Humain announced it will use Qualcomm's hardware, with plans to deploy 200 megawatts of computing power starting in 2026. This early adoption provides a crucial vote of confidence in Qualcomm's new technology.

Qualcomm has not yet disclosed the cost of the new accelerators or which foundry partner will manufacture the chips; its existing partners include Samsung and Taiwan Semiconductor Manufacturing Company (TSMC). The tech community will be watching closely as Qualcomm prepares to report its fiscal fourth-quarter results on November 5.