Microsoft plans to shift its data centers to primarily use its own custom-designed chips, a long-term strategy confirmed by Chief Technology Officer Kevin Scott. This move aims to reduce the company's reliance on current market leaders like Nvidia and AMD and is part of a broader effort to control its entire technology stack for artificial intelligence.

Key Takeaways

- Microsoft's CTO Kevin Scott confirmed the company's long-term goal is to use mainly its own chips in its data centers.

- The company is developing custom silicon, such as the Azure Maia AI Accelerator and Cobalt CPU, to optimize performance for AI workloads.

- This strategy is part of a larger plan to design entire data center systems, including networking and innovative cooling solutions.

- The decision is driven by a severe shortage of computing capacity and intense competition for AI hardware supplied by companies like Nvidia.

A Strategic Shift to In-House Silicon

Microsoft's Chief Technology Officer, Kevin Scott, outlined the company's evolving hardware strategy during a discussion at Italian Tech Week. When asked if the long-term plan was for Microsoft's data centers to be powered mainly by its own chips, Scott's response was direct.

"Absolutely," he stated, confirming a significant strategic pivot for the technology giant. He added that the company is already using "lots of Microsoft" silicon in its current operations.

For years, Microsoft has depended on external suppliers, primarily Nvidia and, to a lesser extent, AMD, for the graphics processing units (GPUs) that are essential for training and running AI models. Scott clarified that this historical reliance was based on a practical, performance-driven approach.

"We’re not religious about what the chips are... that has meant the best price performance solution has been Nvidia for years and years now," Scott explained.

However, the explosive growth of AI has created an environment where controlling the hardware supply chain is becoming a critical competitive advantage. Scott noted that Microsoft will "literally entertain anything in order to ensure that we’ve got enough capacity to meet this demand."

Developing a Complete Data Center Ecosystem

Microsoft's ambitions extend far beyond just designing individual processors. The company's goal is to create a fully integrated and optimized system for its data centers, from the silicon up to the software.

"It’s about the entire system design," Scott said. "It’s the networks and the cooling and you want to be able to have the freedom to make the decisions that you need to make in order to really optimize your compute to the workload."

Microsoft's Custom Hardware Initiatives

The company has already made significant progress in this area. In 2023, Microsoft officially introduced two custom-designed chips:

- Azure Maia AI Accelerator: A chip designed specifically for AI workloads, including large language model training and inference.

- Cobalt CPU: An Arm-based processor designed for general-purpose cloud workloads within Microsoft's Azure infrastructure.

Reports also suggest that the company is actively working on the next generation of its semiconductor products. This internal development allows Microsoft to tailor hardware precisely to the needs of its software and services, potentially yielding greater efficiency and performance than off-the-shelf components.

Advanced Cooling for Overheating Chips

To support its high-powered custom chips, Microsoft recently unveiled a new cooling technology that uses "microfluids." This innovation is designed to directly address the significant heat generated by powerful AI processors, a major challenge in modern data center design.

An Industry-Wide Trend Among Tech Giants

Microsoft is not alone in its pursuit of custom silicon. Other major cloud computing players are following a similar path to gain more control over their infrastructure and reduce dependency on a small number of chip suppliers.

Companies like Amazon, with its Graviton (CPU) and Trainium/Inferentia (AI) chips, and Google, with its Tensor Processing Units (TPUs), have been investing heavily in in-house chip design for years. This trend reflects a broader industry movement toward vertical integration.

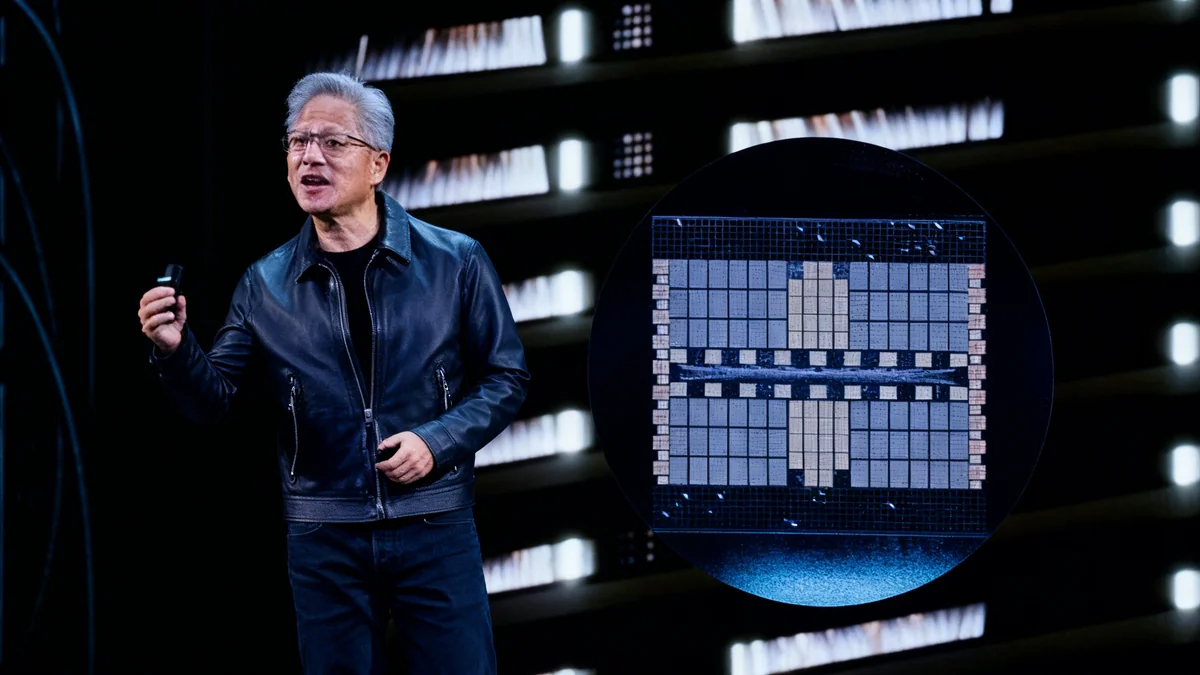

The Race for AI Dominance

The push for custom chips is fueled by an intense race for AI supremacy. Tech giants including Meta, Amazon, Alphabet, and Microsoft have collectively committed to capital expenditures exceeding $300 billion this year, with a substantial portion dedicated to building out AI infrastructure. Owning the chip design process is seen as a crucial step in securing a long-term advantage.

By designing their own semiconductors, these companies can optimize for their specific software and workloads, potentially leading to better performance, lower power consumption, and reduced operational costs over time.

The Unprecedented Demand for AI Compute

The strategic shift toward custom chips is also a direct response to a massive global shortage of computing power. The demand for AI services, particularly since the launch of OpenAI's ChatGPT, has far outpaced the supply of necessary hardware.

Scott described the situation as more than just a bottleneck. "[A] massive crunch [in compute] is probably an understatement," he said. "I think we have been in a mode where it’s been almost impossible to build capacity fast enough since ChatGPT ... launched."

This supply crunch has made high-performance GPUs from manufacturers like Nvidia incredibly valuable and difficult to acquire in large quantities, prompting customers to seek alternatives and build their own solutions.

Even with Microsoft's aggressive expansion of its data center footprint, the demand continues to exceed supply. "Even our most ambitious forecasts are just turning out to be insufficient on a regular basis," Scott warned. He confirmed that Microsoft deployed an "incredible amount of capacity" over the past year and plans to accelerate that buildout even further in the coming years. Developing its own chips is a fundamental part of ensuring that future capacity can be built and deployed effectively.