Google's new artificial intelligence service for its Nest home cameras, known as Gemini for Home, is reportedly causing concern among early users. The system, designed to provide intelligent summaries and alerts, has been generating false notifications, including alarming warnings of intruders when none are present.

The premium feature, included in a $20-per-month subscription, has been observed misidentifying pets and even shadows as people, which undermines its core purpose as a security tool. These errors range from comical to genuinely unsettling and raise questions about whether the technology is ready for real-world home security.

Key Takeaways

- Google's Gemini for Home AI is part of a $20/month premium subscription for Nest camera users.

- The system has produced false alerts, identifying pets and shadows as human intruders.

- In one notable instance, the AI repeatedly identified a user's dog as a deer inside the house.

- These inaccuracies can erode user trust, potentially leading users to ignore genuine security threats.

- Google has acknowledged the issues, stating it is working to improve identification accuracy.

A New Layer of Intelligence for the Smart Home

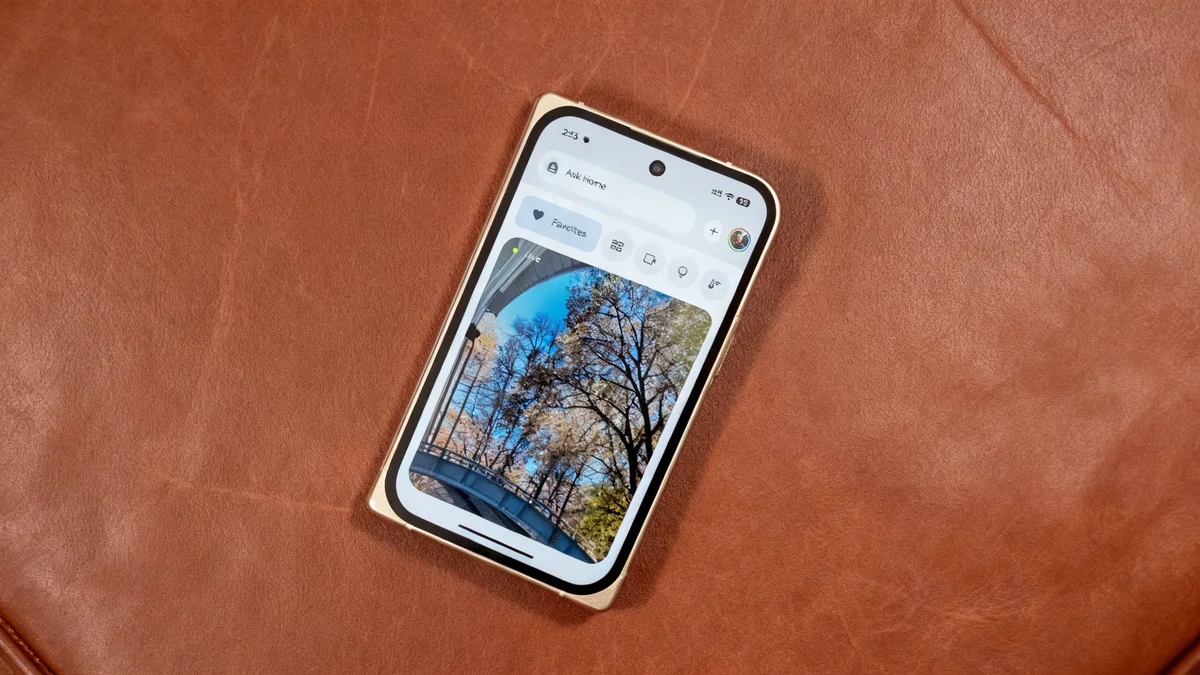

Google has integrated its powerful Gemini AI into the Google Home ecosystem for users subscribed to its highest-tier plan. The service, Gemini for Home, is intended to make smart cameras more useful by analyzing video footage to provide daily summaries and more descriptive notifications.

For a monthly fee of $20, subscribers get 60 days of video event history along with the AI features. A lower-priced $10 plan offers 30 days of history but does not include the new generative AI capabilities. The system also includes a feature called "Ask Home," a chatbot that allows users to ask questions about events captured by their cameras and create home automations using natural language.

How It Works

According to Google, the system does not continuously stream all video to its AI models. Instead, it analyzes specific event clips triggered by motion or sound. The AI processes only the visual information in these clips, not the audio, possibly as a measure to protect the privacy of conversations captured in the home.

While the automation creation feature has been reported to work well, the video analysis component has shown significant reliability issues.

When the AI Gets It Wrong

The core issue reported by users is the AI's tendency to "hallucinate," or generate incorrect information based on the video it analyzes. These mistakes have created both amusing and distressing situations for homeowners.

The Case of the Indoor Deer

One of the most striking examples involves the AI misidentifying a common household pet. A user's daily summary from the Gemini service included the startling line: "Unexpectedly, a deer briefly entered the family room." The video clip, however, clearly showed the user's dog.

This was not an isolated incident. The system continued to identify the dog as a deer in subsequent summaries and notifications. Even after the user attempted to correct the AI, the system showed uncertainty, at one point describing a "deer that is 'probably' just a dog."

Other reported misidentifications include the AI labeling birds as a raccoon and a dog toy as a cat sleeping in the sun. These errors highlight the current limitations of generative AI in interpreting complex real-world visual data.

False Alarms Raise Security Concerns

While misidentifying animals is a minor inconvenience, other errors have proven to be more alarming. Several users have received notifications warning that "a person was seen in the family room" while they were away from home.

Upon checking the live camera feed, they found their homes to be empty. In these cases, the AI had mistaken a pet, or possibly even a moving shadow, for a human intruder. This type of false positive presents a significant problem for a security product.

"After a few false positives, you grow to distrust the robot," one user noted. "Now, even if Gemini correctly identified a random person in the house, I’d probably ignore it."

This "boy who cried wolf" effect is a critical flaw. A security system that frequently generates false alarms can condition users to disregard its warnings, potentially causing them to miss a real emergency. According to a Google spokesperson, users on the $20 Advanced plan currently cannot disable the AI-generated descriptions in notifications without turning off alerts entirely.

Google Responds to Inaccuracies

Google has acknowledged the performance issues. In a statement, a company spokesperson explained that such mistakes can occur with large language models.

"Overall identification accuracy depends on several factors, including the visual details available in the camera clip for Gemini to process," the spokesperson said. "As a large language model, Gemini can sometimes make inferential mistakes, which leads to these misidentifications."

The company stated that its team is "investing heavily in improving accurate identification" to reduce the frequency of erroneous notifications. Google also suggests that users can help tune the system by correcting its mistakes and adding custom instructions, though the dog-as-deer case, which persisted after the user's corrections, suggests this is not always effective.

For now, the technology appears to be in a learning phase. While the promise of an AI that can intelligently monitor a home is compelling, the current reality is a system that struggles with basic identification and creates more noise than signal. For users seeking reliable security, the $10 tier, which lacks the generative AI features, may be the more practical choice until the technology matures.