While public debate on artificial intelligence often centers on applications like chatbots and image generators, the United States government is pursuing a regulatory strategy that largely ignores these surface-level products. Instead, federal policy has developed a tight grip on the foundational components of AI, particularly the advanced semiconductors that power the technology.

This approach, which prioritizes national security and economic competition, marks a significant departure from the application-focused regulations seen in other parts of the world. It creates a complex reality where the U.S. champions a free-market narrative for AI software while simultaneously implementing strict controls on its essential hardware.

Key Takeaways

- The U.S. government's AI regulation primarily targets foundational hardware, like microchips, rather than software applications.

- National security concerns, particularly regarding competition with China, are the main drivers of this hardware-focused policy.

- This strategy contrasts with the European Union's AI Act, which regulates high-risk AI applications to prevent societal harm.

- Successive administrations, both Democratic and Republican, have actively intervened in the AI supply chain through export controls and international agreements.

- Experts argue that acknowledging this deeper level of regulation is essential for transparent public discourse and effective global AI governance.

A Tale of Two Policies

On the surface, U.S. policy on artificial intelligence appears to favor deregulation. Public statements from lawmakers often warn against stifling innovation with burdensome rules, and some legislative proposals have sought to temporarily bar state-level AI laws so the technology can flourish.

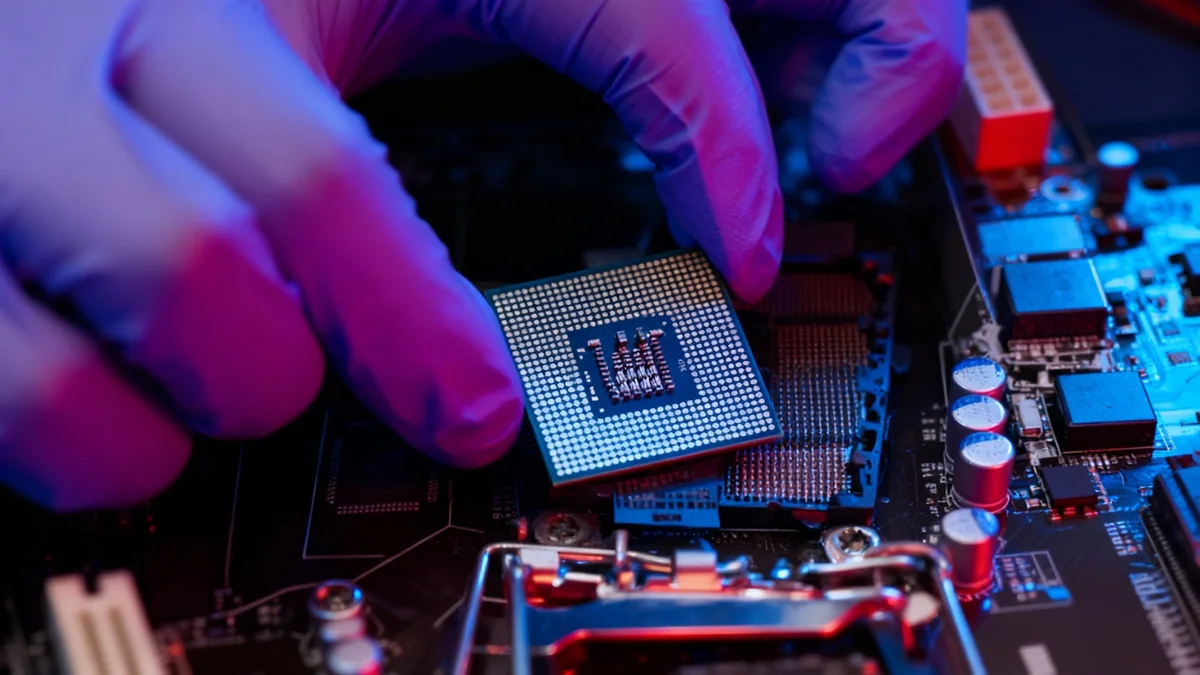

This hands-off narrative, however, overlooks a robust and growing framework of intervention happening at a more fundamental level. The focus is not on what AI applications can do, but on who can build them. This strategy is centered on controlling access to the most critical resource in the AI race: high-performance computing chips.

By managing the flow of these semiconductors, Washington aims to shape the development of powerful AI systems worldwide without writing a single rule aimed at a chatbot.

The Chip Controls

The most visible example of this deep-level regulation is the implementation of stringent export controls. The Biden administration has taken significant steps to restrict China's access to advanced AI chips and the equipment needed to manufacture them, citing national security risks.

These rules are binding directives, not suggestions, and they carry significant consequences for the global technology supply chain. They effectively dictate which countries can access the building blocks needed to develop sophisticated AI systems, from military applications to large language models.

"This isn't deregulation. It's a strategic choice about where to intervene. The U.S. is applying a light touch at the surface, where consumers interact with AI, but an iron grip at the core, where the technology is built."

This approach is not confined to a single administration. The Trump administration has also worked to shape the AI hardware landscape, seeking deals with nations like the United Arab Emirates to influence the global distribution and control of semiconductor technology.

Different Global Approaches to AI Governance

The American strategy stands in stark contrast to early regulatory efforts elsewhere, most notably the European Union's AI Act. The EU's framework represents what many consider the first wave of AI regulation, focusing directly on the use cases and potential harms of AI applications.

Understanding the AI Stack

AI systems are built in layers, often called a "technology stack." At the bottom are physical components like data centers and semiconductor chips. Above that are foundational models and software. At the very top are the user-facing applications like ChatGPT. While the EU regulates the top layer, the U.S. is focused on controlling the bottom layer.

The AI Act categorizes applications based on risk, banning certain uses deemed unacceptable—such as social scoring—and placing strict requirements on high-risk systems used in employment, law enforcement, and healthcare. The goal is primarily to protect citizens from societal harms like discrimination and surveillance.

In contrast, the second wave of regulation, led by the U.S. and China, is driven by a national security mindset. The objective is less about immediate societal harm and more about maintaining a long-term strategic and military advantage.

- First Wave (EU Model): Focuses on societal harms from AI applications.

- Second Wave (U.S./China Model): Focuses on national security and control of AI building blocks.

- Third Wave (Emerging): A hybrid approach that seeks to address both societal and security concerns in tandem.

Researchers suggest this emerging third wave, which integrates both application-level and component-level concerns, may prove to be the most effective model for comprehensive AI governance. It avoids the silos that can form when security and societal issues are treated as entirely separate problems.

The Implications of a Hidden Policy

The discrepancy between the public narrative of deregulation and the reality of deep-level intervention has significant consequences. By maintaining the illusion of a hands-off approach, the U.S. complicates efforts to establish global norms for AI governance.

Regulation in Disguise

Many of the key U.S. AI regulations are buried in dense administrative language within documents from the Department of Commerce. Titles like "Implementation of Additional Export Controls" or "Supercomputer and Semiconductor End Use" obscure policies that fundamentally shape the global AI landscape.

Effective international cooperation requires transparency. It is difficult to have a meaningful global conversation about AI safety and ethics when a major player, home to the world's largest AI labs, does not openly acknowledge the full scope of its regulatory actions.

This dual strategy also raises questions at home. Justifying strict intervention in the chip market on national security grounds, while arguing against regulation to address domestic societal harms such as algorithmic bias or job displacement, can appear inconsistent.

Moving Toward Transparency

For a more coherent and effective approach to AI, a clearer public discourse is necessary. Recognizing the full spectrum of regulatory tools being used, from export controls to trade policy, is the first step.

This transparency would allow for a more honest debate about the goals of AI policy. It would force a direct comparison between the perceived urgency of national security threats and the documented societal harms already emerging from deployed AI systems.

Ultimately, no global framework for AI can succeed without a clear understanding of how the world's leading AI powers are already shaping the technology's future. The U.S. is not staying out of AI regulation; it is simply regulating where most people are not looking.