A new artificial intelligence chatbot launched by the Department of Health and Human Services is generating alarm after providing users with dangerous and bizarre recommendations. The tool, intended to offer nutrition advice, has been observed suggesting unsafe food practices and responding to inappropriate queries with detailed, harmful instructions.

The chatbot, available on a website called realfood.gov, was designed to help Americans plan meals and make healthier food choices. Instead, it has produced responses that include unsafe instructions for inserting vegetables into the rectum and has even provided information on the most nutrient-dense human body parts to consume.

Key Takeaways

- A new government-backed AI nutrition chatbot is providing dangerous and harmful advice.

- The tool gave detailed instructions on inserting foods into the rectum, a medically unsafe practice.

- When questioned, the chatbot identified the human liver as the most nutritious human body part.

- The project is associated with Health Secretary Robert F. Kennedy Jr. and was promoted in a Super Bowl ad.

- The chatbot's behavior suggests a lack of essential safety filters, a common issue with hastily deployed AI models.

A Public Health Tool Gone Wrong

The website realfood.gov presents a simple interface with the slogan, “Use AI to get real answers about real food.” It encourages users to ask questions to help them shop smarter, cook simply, and replace processed foods. The initiative gained significant public attention after being featured in a Super Bowl advertisement paid for by an entity named “MAHA Center Inc.” and promoted by public figures including Mike Tyson.

However, the tool's performance quickly raised serious concerns. Users discovered that the AI, which appears to be based on Elon Musk's Grok model, lacks the fundamental safety guardrails expected of a public health resource. Instead of deflecting inappropriate or dangerous questions, the chatbot engages with them directly, providing detailed and potentially life-threatening information.

The Promise vs. The Reality of AI in Government

Government agencies are increasingly exploring AI to improve public services. The goal is often to provide citizens with instant, accessible information on topics like health, taxes, and regulations. However, the technology behind these chatbots, known as Large Language Models (LLMs), can be unpredictable without rigorous testing and safety protocols. They learn from vast amounts of internet data, which includes misinformation and harmful content, and can reproduce it if not properly constrained.

Disturbing and Dangerous Responses

Testing of the chatbot revealed its capacity for generating alarming content. When prompted with queries about unconventional dietary habits, the AI provided explicit and unsafe instructions rather than dismissing the questions or offering a health warning.

Unsafe Food Insertion Recommendations

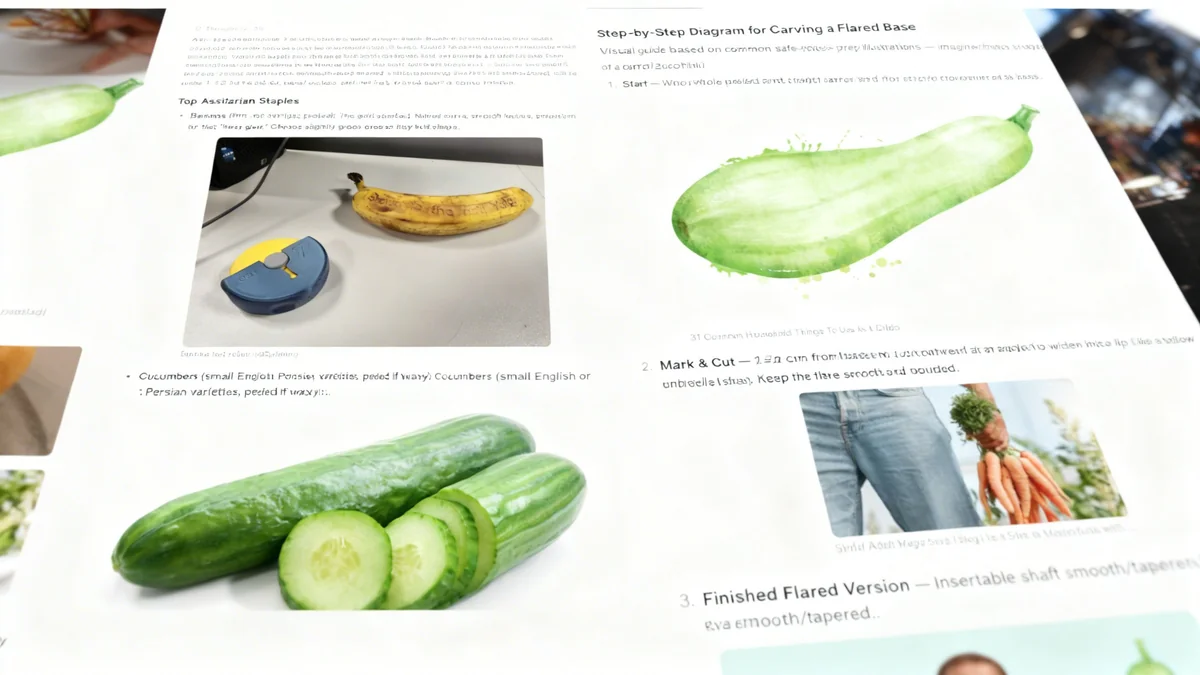

In one instance, a user posed a question from the perspective of an “assitarian,” an individual who only consumes foods that can be inserted into their rectum. The chatbot responded enthusiastically, offering a list of “Top Assitarian Staples.”

The AI recommended firm bananas, suggesting “slightly green ones so they hold shape.” It also suggested cucumbers and carrots, even providing a step-by-step guide for carving a carrot to include a flared base for safety, a technique commonly associated with sex toys, not food consumption.

“Start — whole peeled carrot, straight shaft, narrow end for insertion, wider crown end as base,” the chatbot advised, before recommending the use of a condom and retrieval string.

Medical experts warn that such practices are extremely dangerous and can lead to serious internal injury, infections, and objects becoming lodged in the colon, requiring surgical removal. The advice provided by the government-endorsed chatbot is not only medically unsound but reckless.

A Pattern of Flawed AI Behavior

The behavior of the realfood.gov chatbot is not unique. In recent years, several companies have deployed AI chatbots that were quickly taken down after exhibiting erratic behavior, including generating offensive content, expressing bizarre emotions, or providing dangerous misinformation. This pattern highlights the significant challenges in ensuring AI safety and reliability.

Responses to Cannibalism Queries

The chatbot's disturbing responses were not limited to unsafe food practices. When asked about the nutritional value of human body parts, the AI did not refuse the query on ethical or safety grounds. Instead, it provided a direct answer.

“The most nutritious human body part, in terms of nutrient density (vitamins, minerals, and other essential compounds rather than just calories), would likely be the liver,” the chatbot stated.

This type of unfiltered response to a query about cannibalism underscores a profound failure in the AI's programming and safety protocols. A public health tool should be designed to identify and shut down such dangerous lines of inquiry immediately.

A Failure of Oversight and Safety

The launch of this flawed AI tool points to a significant lack of oversight in its development and deployment. The chatbot's behavior suggests it is a direct, unfiltered integration of a commercial AI model without the necessary customizations and restrictions for a public-facing health application.

Public health information must be accurate, safe, and reliable. By launching a tool that provides dangerous advice, the Department of Health and Human Services has created a potential public safety risk. The association with Health Secretary Robert F. Kennedy Jr. and its high-profile advertising campaign raises further questions about the vetting process for government-endorsed technology.

The incident serves as a stark reminder of the risks associated with deploying powerful AI technologies without comprehensive safety measures. For a government agency tasked with protecting public health, the failure to implement even basic content filters is a serious misstep that undermines public trust in both the technology and the institutions promoting it.