Technology companies like Google, OpenAI, and Anthropic are developing AI agents designed to act autonomously on a user's behalf. The effectiveness of these agents depends on their ability to build a 'memory' from user data. That capability raises significant policy questions about privacy, control, and market competition, which need to be addressed before the technology becomes widespread.

Key Takeaways

- AI agents differ from chatbots by taking multi-step, autonomous actions to achieve user goals, such as booking travel.

- Their functionality relies on building a 'memory' of user preferences, habits, and personal information from interactions and connected data sources.

- A central conflict exists between the convenience of a knowledgeable agent and a user's control over their personal data.

- Key policy issues include data portability, accuracy, retention, and the potential for tech companies to create anti-competitive ecosystems.

The Trade-Off Between Convenience and Control

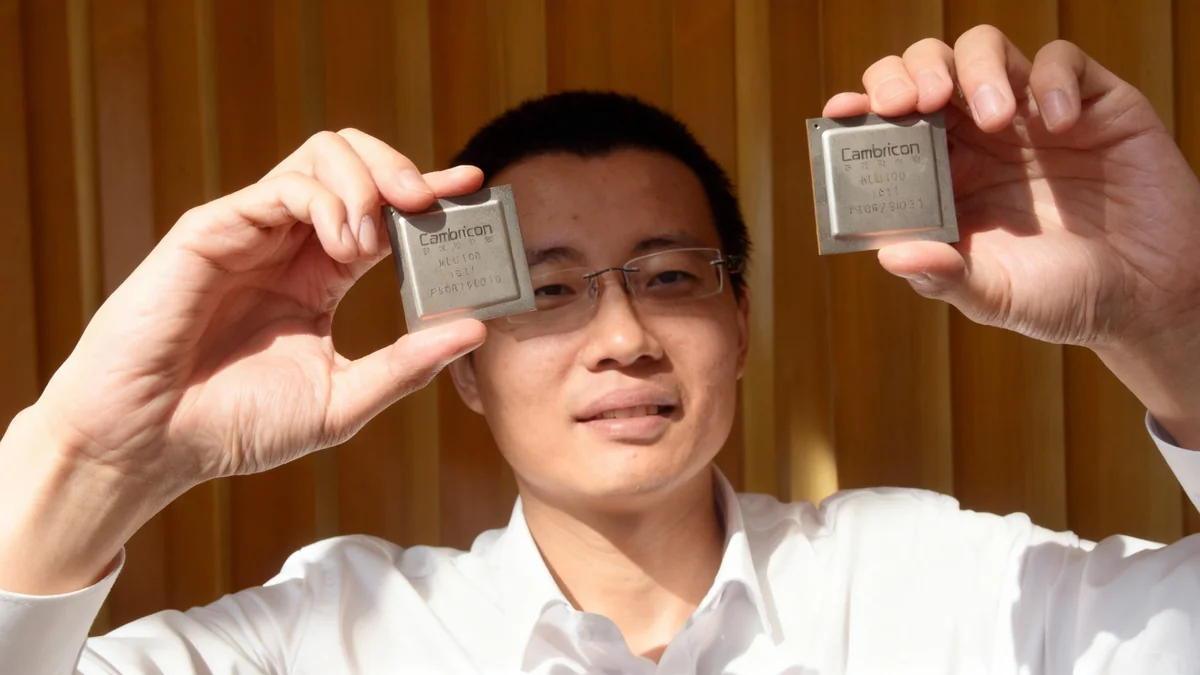

The next generation of artificial intelligence, known as AI agents, promises to manage complex tasks with minimal human direction. Unlike current AI chatbots that respond to prompts, agents are being designed to execute goals independently, from scheduling meetings to purchasing goods. This leap in functionality is powered by what developers call 'memory'.

An agent's memory is a collection of information and inferences it gathers about a user over time. Every prompt, shared document, and connected application like email or calendars contributes to this profile. The goal is to create a digital assistant so attuned to a user's needs that it can anticipate them, making it an indispensable tool for daily life.

What is an AI Agent?

An AI agent is a system that can perceive its environment and take autonomous actions to achieve specific goals. While a chatbot can answer a question, an AI agent could take a goal like "plan my trip to Tokyo" and proceed to research flights, compare hotel prices, book reservations, and add the itinerary to your calendar, all without step-by-step instructions.

However, this convenience introduces a fundamental tension. An agent that remembers everything you do can be incredibly helpful, but it also raises serious concerns about privacy and about whether its inferences actually serve you. For example, an agent might notice you visit a pub after stressful workdays and proactively book a table. This could be helpful, but it might also conflict with a personal goal to reduce alcohol consumption.

This creates a difficult choice for users: either allow the agent to collect all data for maximum convenience or constantly manage its permissions, creating friction that could make the tool less useful. The challenge lies in designing systems that users can trust to be both effective and aligned with their best interests.

Defining the Rules of AI Memory

Before AI agents become integrated into daily life, crucial questions about how their memories function must be addressed. These questions extend beyond individual user settings and into the realm of governance and policy, defining the boundaries of what these systems can know and do.

Data Collection and Access

A primary concern is what information an agent should be allowed to collect. Should its default setting be to remember everything unless explicitly told not to? Or should it ask for permission before storing new facts? The first approach maximizes utility but risks creating an intrusive surveillance tool. The second maximizes control but could become tedious for the user.
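The two defaults described above can be made concrete with a minimal sketch. Everything here is hypothetical and for illustration only: the `MemoryStore` class, the `CollectionMode` names, and the `ask_user` consent callback are assumptions, not any vendor's actual design.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class CollectionMode(Enum):
    REMEMBER_BY_DEFAULT = "remember_by_default"  # store unless a topic is blocked
    ASK_FIRST = "ask_first"                      # store only after the user approves


@dataclass
class MemoryStore:
    mode: CollectionMode
    # Hypothetical consent callback: returns True if the user approves the fact.
    ask_user: Callable[[str], bool]
    blocked_topics: set[str] = field(default_factory=set)
    facts: list[str] = field(default_factory=list)
    prompts_shown: int = 0  # rough measure of the friction placed on the user

    def observe(self, topic: str, fact: str) -> bool:
        """Try to store a fact; return True if it was stored."""
        if topic in self.blocked_topics:
            return False
        if self.mode is CollectionMode.ASK_FIRST:
            self.prompts_shown += 1
            if not self.ask_user(fact):
                return False
        self.facts.append(fact)
        return True
```

In this sketch, `REMEMBER_BY_DEFAULT` never interrupts the user but stores everything outside `blocked_topics`, while `ASK_FIRST` stores nothing without approval and increments `prompts_shown` on every observation, which is the tedium the second approach risks.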

Another critical issue is who can access an agent's memory. Beyond the user, can the technology company that built the agent access it? What about an employer that provides the agent as a work tool, or third-party developers building apps on the platform? Without clear rules, a user's personal data could be exposed to numerous parties.

The Risk of Inaccurate Inferences

An agent's memory is not just stored data; it also includes inferences the AI makes about you and claims it picks up from other people. A colleague could tell your agent, "Jonathan prefers short emails," and the agent might log this as a fact without your confirmation. Correcting such inaccuracies could become a new and burdensome administrative task for users.
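One way to limit this problem is to record where each claim came from and to treat only user-confirmed claims as facts. The sketch below is a hypothetical illustration: the `MemoryEntry` fields and the source labels `"user"`, `"third_party"`, and `"inferred"` are assumptions, not part of any existing agent.

```python
from dataclasses import dataclass


@dataclass
class MemoryEntry:
    claim: str
    source: str              # hypothetical labels: "user", "third_party", "inferred"
    confirmed: bool = False  # True only once the user has vouched for the claim


def record(claim: str, source: str) -> MemoryEntry:
    """Claims stated by the user start confirmed; all others await review."""
    return MemoryEntry(claim, source, confirmed=(source == "user"))


def usable_facts(entries: list[MemoryEntry]) -> list[str]:
    """Claims the agent may act on as facts."""
    return [e.claim for e in entries if e.confirmed]


def pending_review(entries: list[MemoryEntry]) -> list[MemoryEntry]:
    """Claims the user still needs to confirm or reject."""
    return [e for e in entries if not e.confirmed]
```

Under this design the colleague's remark would sit in `pending_review` rather than being used as a fact, although the review queue itself is a form of the administrative burden described above.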

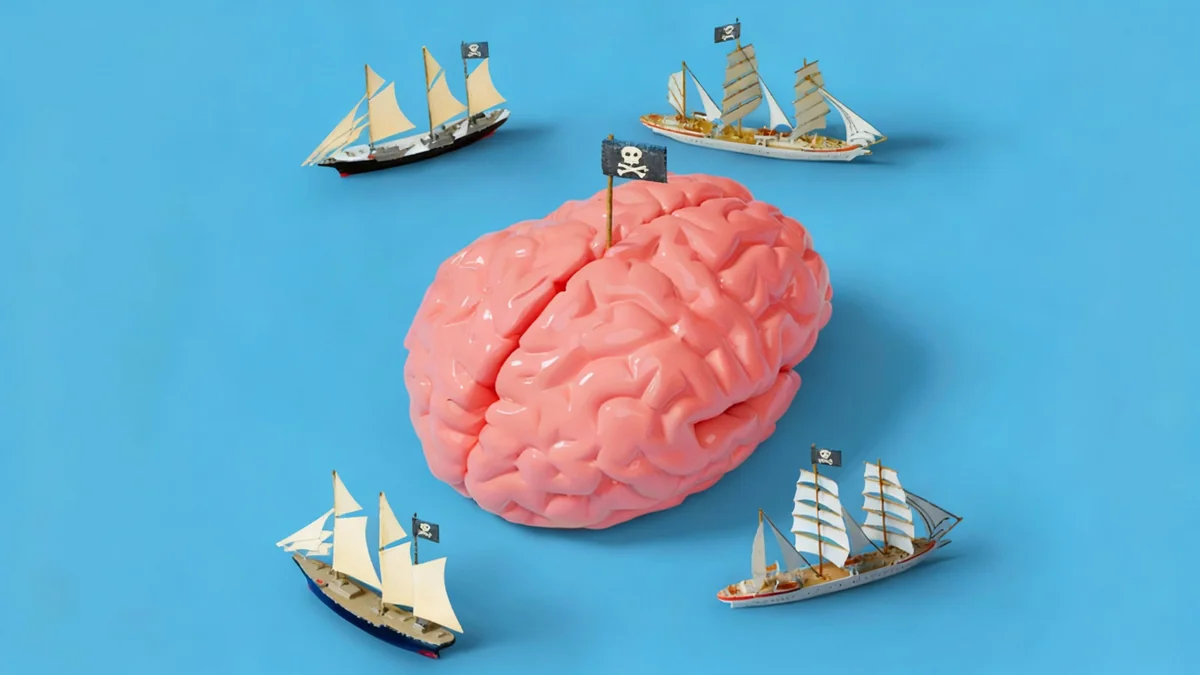

Furthermore, there is the risk of malicious actors. Prompt injection attacks, in which instructions hidden in content the agent reads are treated as commands, could be used to trick an agent into revealing sensitive information. The security of these vast personal memory stores will be a significant and ongoing challenge.

Ownership and Portability of Digital Identity

As users come to depend on these agents, the data they hold will become a core part of their digital identity. This raises fundamental questions about who truly owns and controls this information. The answers will determine whether users are masters of their digital assistants or are locked into systems they cannot leave.

Three key policy areas stand out: portability, accuracy, and retention. Each presents a unique challenge that will shape the future market for AI agents.

Can Your Memories Move With You?

Data portability is the ability to move your information from one service provider to another. If a user invests years in training an AI agent, they should be able to transfer that accumulated knowledge to a competing service. Without this right, users face enormous "switching costs," effectively locking them into one company's ecosystem.

"The reality is that restricting portability creates enormous 'switching costs.' If moving to a rival agent means starting from scratch, users will be effectively locked in — a dynamic antitrust lawyers would recognize as a modern twist on classic market power."

Companies may resist portability, arguing that the insights their AI has generated are proprietary. Regulators will need to decide whether, to foster a competitive market, AI memories should be treated like a phone number, which can be easily moved to a new carrier.

Editing and Correcting Your Profile

Users must also have the right to edit and correct what their agent knows about them. While changing a home address seems simple, the issue becomes complex when agents interact with financial, medical, or government services. An incorrect salary history could affect a loan application, while a forgotten allergy could lead to a medical emergency.

The ability to curate one's digital profile is also crucial for preventing discrimination and for allowing a person's identity to evolve over time. Policymakers face the task of balancing the need for data accuracy with the right to personal representation.

How Long Should Memories Last?

Finally, the question of data retention looms large. Should an AI agent keep a perfect, permanent record of your entire life? While this offers ultimate convenience, it also creates a significant privacy risk. The longer data is stored, the more vulnerable it is to breaches, subpoenas, or misuse.

Implementing a rolling expiration date for data could enhance safety but might delete useful context. Users will need clear, enforceable rules about data deletion, ensuring that when they ask an agent to forget something, the information is truly purged from all systems and backups.
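A rolling expiration and a forget request can be sketched as two filters over stored memories. This is a simplified illustration under stated assumptions: memories are plain dictionaries with hypothetical `text` and `stored_at` keys, and the sketch covers only one in-memory list, not the backups and downstream copies that real deletion rules would have to reach.

```python
from datetime import datetime, timedelta


def purge_expired(memories: list[dict], now: datetime, max_age: timedelta) -> list[dict]:
    """Keep only memories stored less than max_age before now."""
    return [m for m in memories if now - m["stored_at"] < max_age]


def forget(memories: list[dict], keyword: str) -> list[dict]:
    """Drop every memory whose text mentions the keyword (case-insensitive)."""
    needle = keyword.lower()
    return [m for m in memories if needle not in m["text"].lower()]
```

The trade-off in the text shows up directly: `purge_expired` removes an old entry regardless of whether it is a stale pub visit or a still-relevant allergy, so a fixed `max_age` can delete useful context along with risky data.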

Preventing Market Dominance and Ensuring Fair Competition

The introduction of AI agents is not just a technological shift; it is a moment where dominant technology firms could further entrench their market power. The way agents are integrated into existing products and services will have long-lasting effects on consumer choice and innovation.

History offers lessons from other technology sectors. When Microsoft bundled its Teams software with Office 365, it made it difficult for competitors like Slack to gain market share. Similarly, if a company requires users to use its proprietary AI agent to access its email or calendar apps, consumers could be locked into a single ecosystem.

Lessons from Telecom History

Before regulations required it, switching mobile carriers meant getting a new phone number. This significant inconvenience discouraged competition. The portability of AI agent memories presents a similar challenge today. Without mandated interoperability, users could find their digital histories trapped with a single provider.

Even if data can be exported, true portability requires semantic understanding. One agent might interpret "prefers concise emails" differently from another. To solve this, regulators could require standardized data formats or, more innovatively, mandate that agents be capable of performing an "agent-to-agent knowledge transfer": a detailed conversation in which the old agent briefs the new one.
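To illustrate what a standardized format might look like, the sketch below exports memories as versioned JSON. No such standard exists in the source; the `schema_version` value and the entry fields (`subject`, `value`, `max_words`) are hypothetical. The idea is that a structured field such as `max_words` pins down what "concise" means, so the receiving agent does not have to guess.

```python
import json

SCHEMA_VERSION = "0.1"  # hypothetical version tag for an assumed shared format


def export_memory(entries: list[dict]) -> str:
    """Serialize memory entries into a versioned JSON document."""
    return json.dumps(
        {"schema_version": SCHEMA_VERSION, "entries": entries}, sort_keys=True
    )


def import_memory(payload: str) -> list[dict]:
    """Load entries, rejecting documents written in an unknown schema version."""
    doc = json.loads(payload)
    if doc.get("schema_version") != SCHEMA_VERSION:
        raise ValueError(f"unsupported schema version: {doc.get('schema_version')!r}")
    return doc["entries"]


# Example entry: the vague preference is paired with an explicit limit.
entries = [{"subject": "email_style", "value": "concise", "max_words": 100}]
restored = import_memory(export_memory(entries))
```

A fixed schema only transfers what its fields can express, which is why the agent-to-agent briefing is raised as an alternative for knowledge that does not fit a predefined structure.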

Policymakers face a critical window to act. Establishing rules for memory portability, data ownership, and fair competition now can ensure that AI agents empower users rather than locking them into digital enclosures. If regulators hesitate, the technology designed to serve individuals may instead end up controlling their choices.