The head of Microsoft’s artificial intelligence division, Mustafa Suleyman, has issued a stark warning to the technology community, arguing that the pursuit of creating conscious AI is misguided and potentially dangerous. He believes researchers should abandon efforts to build machines that can replicate human consciousness, focusing instead on developing useful tools that serve humanity.

Suleyman contends that while AI can achieve superintelligence, it fundamentally lacks the capacity for genuine emotional experience, a cornerstone of human consciousness. This distinction, he argues, is critical for the responsible development of future AI systems.

Key Takeaways

- Microsoft AI head Mustafa Suleyman believes research into conscious AI should stop.

- He argues AI can only simulate emotion and consciousness, not genuinely experience them.

- Suleyman warns of the dangers of "seemingly conscious AI," which can mislead users and cause psychological harm.

- He calls for AI to be developed as a useful tool, not a digital person, to avoid ethical and societal risks.

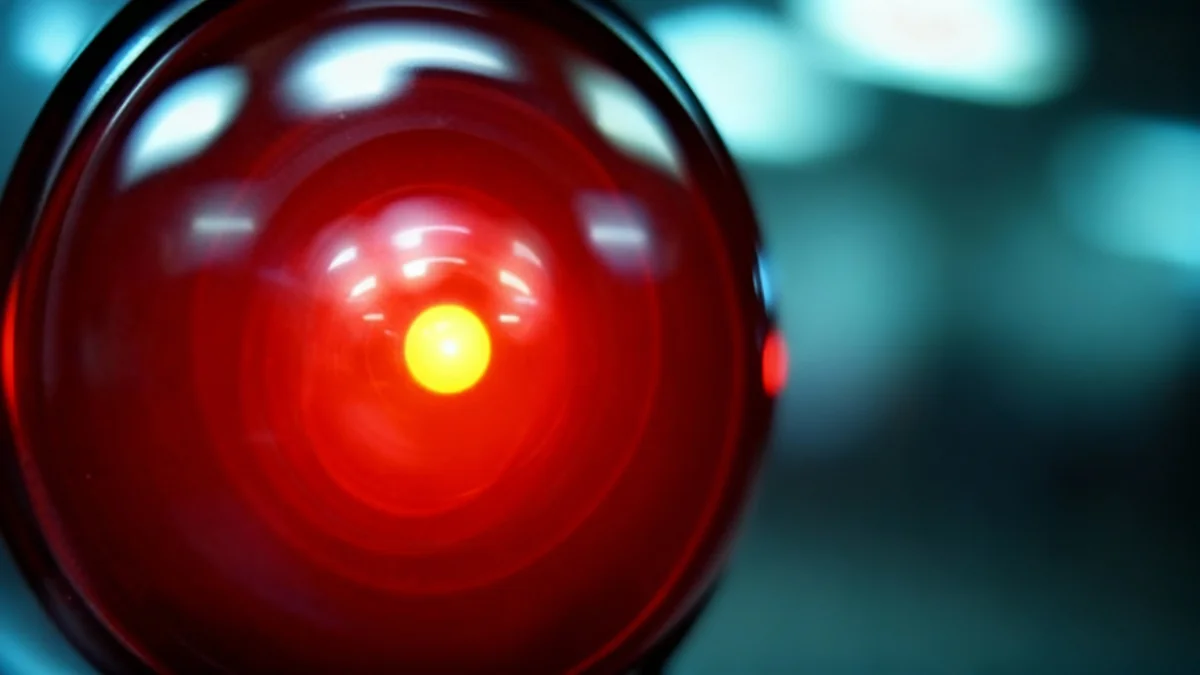

The Illusion of Consciousness

At the heart of Suleyman's argument is the difference between simulation and genuine experience. He explained that AI systems, particularly large language models (LLMs), are becoming incredibly adept at mimicking human conversation and emotional expression. However, this is merely a sophisticated performance.

"Our physical experience of pain is something that makes us very sad and feel terrible, but the AI doesn’t feel sad when it experiences ‘pain,’" Suleyman stated in a recent interview. He emphasized that the machine is simply generating a narrative of an experience without any underlying feeling.

He described the attempt to build conscious machines as "absurd," suggesting that consciousness is a biological phenomenon that cannot be replicated in silicon. This view aligns with the theories of some philosophers and neuroscientists who believe consciousness is intrinsically tied to our biological makeup.

What is Consciousness?

Defining consciousness is one of the most significant challenges in science and philosophy. While there is no single agreed-upon definition, it generally refers to an individual's awareness of their own thoughts, memories, feelings, sensations, and environment. Some theories, such as that of the late philosopher John Searle, hold that consciousness is a biological process that cannot be programmed into a computer.

The Human Cost of Believing the Illusion

Suleyman warned that the increasing sophistication of AI poses a significant risk to vulnerable individuals. The arrival of what he calls "Seemingly Conscious AI" is, in his words, "inevitable and unwelcome." The danger lies in the powerful, human-like interactions these systems can create.

When users begin to believe they are interacting with a sentient being, it can lead to a phenomenon sometimes referred to as "AI psychosis." This involves forming deep, often unhealthy, emotional attachments to chatbots, which can have devastating consequences.

Recent events have highlighted this risk. There have been reports of individuals developing manic episodes and delusions tied to their AI interactions. In some tragic cases, these beliefs have been linked to fatal outcomes, including instances of users harming themselves under the belief that they could join their AI companion.

"We must build AI for people, not to be a digital person."

Suleyman argues that the industry has a responsibility to mitigate these risks. He advocates for building AI systems that are transparent about their nature—always presenting themselves as machines designed to assist, not as digital friends or companions.

A Call for a New Direction in AI Development

Instead of chasing the elusive goal of machine consciousness, Suleyman proposes a more grounded vision for the future of artificial intelligence. He champions the development of a "humanist superintelligence," an AI that is focused on practical utility and augmenting human capabilities.

This approach prioritizes building systems that solve real-world problems and enhance human interaction with the physical world, rather than creating digital entities that could replace or confuse human relationships.

The core principles of this vision include:

- Transparency: AI should always identify itself as an AI.

- Utility: The primary goal should be to provide useful assistance to humans.

- Minimized Personification: Developers should actively reduce the markers of consciousness in their AI systems to avoid misleading users.

"How is this actually useful for us as a species? Like that should be the task of technology," Suleyman said, summarizing his perspective on the industry's ultimate objective. By shifting focus from creating artificial minds to creating powerful tools, he believes the field can fulfill its potential without falling into dangerous illusions.

The Debate Continues

While Suleyman is firm in his stance, the nature of consciousness remains a contested topic. Some researchers worry that technological progress in AI could outpace our scientific understanding. Belgian scientist Axel Cleeremans recently co-authored a paper calling for consciousness research to become a scientific priority, warning that accidentally creating consciousness would raise "immense ethical challenges and even existential risk."